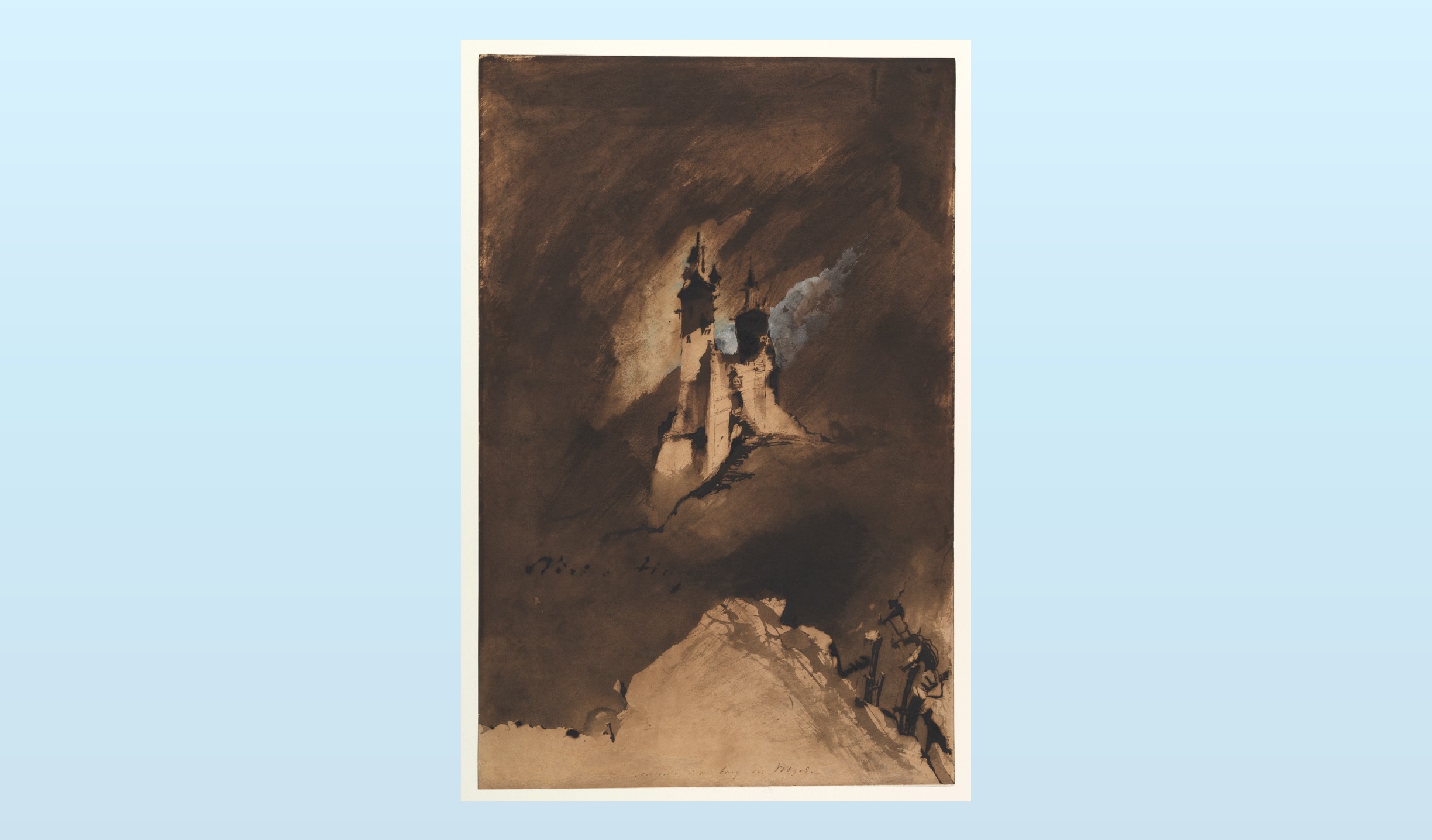

Building Castles in the Air, but With Surprise Physics

In the software engineering classic, “The Mythical Man-Month,” Frederick P. Brooks Jr. wrote:

The programmer, like the poet, works only slightly removed from pure thought-stuff. He builds his castles in the air, from air, creating by exertion of the imagination. Few media of creation are so flexible, so easy to polish and rework, so readily capable of realizing grand conceptual structures.

Yet the program construct, unlike the poet’s words, is real in the sense that it moves and works, producing visible outputs separate from the construct itself. It prints results, draws pictures, produces sounds, moves arms. The magic of myth and legend has come true in our time. One types the correct incantation on the keyboard, and a display screen comes to life, showing things that never were nor could be.

I used to see this quote more often. Developers cited it frequently in the years after the dot-com bust, when the iPhone kicked off the smartphone boom and the internet and social media went mainstream. Suddenly, the real-world impact of programmers was everywhere, experienced by seemingly everyone.

In 2012, just prior to Facebook’s IPO, Andrew Bosworth (now Meta’s CTO) had it printed on his business card.¹

The Probabilistic Nature of Building Atop AI

During recent conversations with Jeff Huber and Jason Liu, we touched on the probabilistic nature of building atop AI.

Randomness is built into LLMs (they even expose a parameter to tweak it), and our agents, applications, and pipelines must account for the unexpected. This is different from the programming of past decades. It’s a workflow more akin to data science, where you form hypotheses, design experiments, and rapidly iterate until you’re on (relatively) stable ground.

Or, as Jeff put it, “People who are good at AI are used to getting mugged by the fractal complexity of reality.”
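The randomness knob most LLM APIs expose is temperature. Here’s a minimal sketch of how it’s commonly described: the model’s raw scores (logits) are divided by the temperature before a softmax, and the next token is sampled from the result. The vocabulary and scores below are made up, and real inference stacks add other knobs (top-k, top-p) that this ignores.

```python
import math
import random
from collections import Counter

def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Turn raw scores into probabilities; lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max before exponentiating, for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_token(tokens: list[str], logits: list[float], temperature: float, rng: random.Random) -> str:
    """Draw one token, weighted by the temperature-scaled probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]

tokens = ["yes", "no", "maybe"]  # hypothetical vocabulary
logits = [2.0, 1.0, 0.5]         # hypothetical model scores
rng = random.Random(0)
for t in (0.2, 1.0, 2.0):
    # Same inputs every time; only the temperature changes.
    counts = Counter(sample_token(tokens, logits, t, rng) for _ in range(1000))
    print(t, dict(counts))
```

At a temperature of 0.2, nearly every sample is “yes”; at 2.0 the other tokens show up regularly. Same inputs, different outputs.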

Just yesterday, Gian Segato wrote an excellent piece exploring this exact shift:

We are no longer guaranteed what x is going to be, and we’re no longer certain about the output of y either, because it’s now drawn from a distribution…

Stop for a moment to realize what this means. When building on top of this technology, our products can now succeed in ways we’ve never even imagined, and fail in ways we never intended.

This is incredibly new, not just for modern technology, but for human toolmaking itself. Any good engineer will know how the Internet works: we designed it! We know how packets of data move around, we know how bytes behave, even in uncertain environments like faulty connections. Any good aerospace engineer will tell you how to approach the moon with spaceships: we invented them! Knowledge is perfect, a cornerstone of the engineering discipline. If there’s a bug, there’s always a knowable reason: it’s just a matter of time to hunt it down and fix it.

You should grab a coffee and read the whole essay.

As someone who would never call myself an engineer, I found that last line rang true. AI development feels more akin to science (where we poke things and note how they work) than engineering (where we build structures with documented parameters).

But then a Hacker News user named “potatolicious” wrote this comment on a thread about my AI job title guide:

Most classical engineering fields deal with probabilistic system components all of the time. In fact I’d go as far as to say that inability to deal with probabilistic components is disqualifying from many engineering endeavors.

Process engineers for example have to account for human error rates. On a given production line with humans in a loop, the operators will sometimes screw up. Designing systems to detect these errors (which are highly probabilistic!), mitigate them, and reduce the occurrence rates of such errors is a huge part of the job.

Likewise even for regular mechanical engineers, there are probabilistic variances in manufacturing tolerances. Your specs are always given with confidence intervals (this metal sheet is 1mm thick +- 0.05mm) because of this. All of the designs you work on specifically account for this (hence safety margins!). The ways in which these probabilities combine and interact is a serious field of study.

Software engineering is unlike traditional engineering disciplines in that for most of its lifetime it’s had the luxury of purely deterministic expectations. This is not true in nearly every other type of engineering.

If anything the advent of ML has introduced this element to software, and the ability to actually work with probabilistic outcomes is what separates those who are serious about this stuff vs. demoware hot air blowers.

I’ll be thinking about this for quite some time.
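The commenter’s point about tolerances combining can be made concrete with a standard tolerance stack-up calculation. This sketch uses a hypothetical stack of five of those 1 mm ± 0.05 mm sheets and compares the worst-case sum with the root-sum-square (RSS) estimate, plus a quick Monte Carlo check. Treating each tolerance as independent and normally distributed, with ±0.05 mm at 3σ, is an assumption made for illustration.

```python
import math
import random
import statistics

# Hypothetical stack of five sheets, each specified as 1.00 mm ± 0.05 mm.
nominal_mm = [1.0] * 5
tolerance_mm = [0.05] * 5

# Worst case: every sheet lands at the edge of its tolerance at the same time.
worst_case = sum(tolerance_mm)

# Root-sum-square: assumes independent variations, which partially cancel.
rss = math.sqrt(sum(t ** 2 for t in tolerance_mm))

def simulate_stack(rng: random.Random, trials: int = 20_000) -> list[float]:
    """Monte Carlo: draw each thickness from a normal distribution where ±tol is 3σ."""
    return [
        sum(rng.gauss(n, t / 3) for n, t in zip(nominal_mm, tolerance_mm))
        for _ in range(trials)
    ]

totals = simulate_stack(random.Random(42))
print(f"nominal total: {sum(nominal_mm):.2f} mm")
print(f"worst case:    ±{worst_case:.3f} mm")
print(f"RSS:           ±{rss:.3f} mm")
print(f"simulated 3σ:  ±{3 * statistics.stdev(totals):.3f} mm")
```

The gap between the ±0.25 mm worst case and the roughly ±0.11 mm RSS estimate is the kind of reasoning the commenter describes: you design around a distribution, not a single number.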

Omar Khattab pointed out this isn’t entirely new:

Any software systems that made network requests had these properties. Honestly, any time you called a complex function based on its declared contract rather than based on understanding it procedurally you engaged in the kind of reasoning needed to build AI systems.

This is true. But I’d argue that simulating network issues and designing concurrent systems are a step or two below the variability of AI models. Moreover, these concerns were never the dominant focus of the software engineering industry; many developers simply offloaded them or avoided dealing with them.
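For contrast, here’s the classic way software has absorbed the network flakiness Omar mentions: retry with exponential backoff and jitter. This is a minimal sketch, not any particular library’s API; `TransientError` and `make_flaky_request` are hypothetical stand-ins for a timeout and a real network call.

```python
import random
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")

class TransientError(Exception):
    """Hypothetical error type standing in for a timeout or dropped connection."""

def call_with_retries(
    fn: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.1,
    rng: Optional[random.Random] = None,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Retry fn on TransientError, backing off exponentially with full jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    rng = rng or random.Random()
    for attempt in range(max_attempts):
        try:
            return fn()
        except TransientError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the failure to the caller
            # Wait a random time between 0 and base_delay * 2^attempt.
            sleep(rng.uniform(0, base_delay * 2 ** attempt))
    raise AssertionError("unreachable")

def make_flaky_request(failure_rate: float, rng: random.Random) -> Callable[[], str]:
    """Hypothetical network call that fails with the given probability."""
    def request() -> str:
        if rng.random() < failure_rate:
            raise TransientError("connection reset")
        return "200 OK"
    return request
```

Retries work because a network failure is loud: the call either raises or succeeds. An LLM that returns a fluent but wrong answer raises nothing, so a wrapper like this can’t catch it. That’s one way to see why the variability of AI models feels like a step beyond.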

Finally, for each new app you need to understand the probabilistic fingerprint of that domain for a given model. The uncertainty is a moving target that has to be discovered anew every time.
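Discovering that fingerprint looks a lot like the data-science loop described earlier: sample the component many times and measure. Here’s a minimal sketch, assuming a hypothetical `fake_model` standing in for a real LLM call and a simple pass/fail check. The Wilson score interval is one standard way to put error bars on a pass rate; it’s my choice for illustration, not a prescribed method.

```python
import math
import random
from typing import Callable

def wilson_interval(successes: int, trials: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (about 95% for z=1.96)."""
    if trials <= 0:
        raise ValueError("need at least one trial")
    p = successes / trials
    denom = 1 + z ** 2 / trials
    center = (p + z ** 2 / (2 * trials)) / denom
    half = z * math.sqrt(p * (1 - p) / trials + z ** 2 / (4 * trials ** 2)) / denom
    return max(0.0, center - half), min(1.0, center + half)

def measure_pass_rate(
    run_once: Callable[[], str], check: Callable[[str], bool], trials: int
) -> tuple[float, tuple[float, float]]:
    """Call a nondeterministic component repeatedly and summarize how often it passes."""
    passes = sum(check(run_once()) for _ in range(trials))
    return passes / trials, wilson_interval(passes, trials)

# Hypothetical stand-in for an LLM call: returns the expected label ~85% of the time.
rng = random.Random(1)
def fake_model() -> str:
    return "refund" if rng.random() < 0.85 else "complaint"

rate, (low, high) = measure_pass_rate(fake_model, lambda out: out == "refund", trials=200)
print(f"pass rate {rate:.2f}, 95% CI [{low:.2f}, {high:.2f}]")
```

Because the fingerprint is specific to a model and a domain, a harness like this would need rerunning whenever either one changes.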

Updating Brooks’ Quote for Applied AI

Perhaps we should update the Fred Brooks quote for those building atop AI: programmers still build castles in the air, but first they have to discover which physics apply.

-

¹ It may have been there earlier and/or later; that’s just when I saw it.