Making Sense of AI Job Titles

A Cheat Sheet for Job Titles in the AI Ecosystem

Even when you live and breathe AI, the job titles can feel like a moving target. I can only imagine how mystifying they must be to everyone else.

Because the field is actively evolving, the language we use keeps changing. Brand new titles appear overnight or, worse, one term means three different things at three different companies.

This is my best attempt at a “Cheat Sheet for AI Titles.” I’ll try to keep it updated as the jargon shifts, settles, or fades away. As always, shoot me a note with any additions, updates, thoughts, or feedback.

The AI Job Title Decoder Ring

While collecting examples of titles from job listings, Twitter bios, and blogs, I noticed a pattern: nearly all AI job titles are created by mixing and matching a handful of terms. Organizing the Post-Its on my wall, I was reminded of “mix-and-match” children’s books:

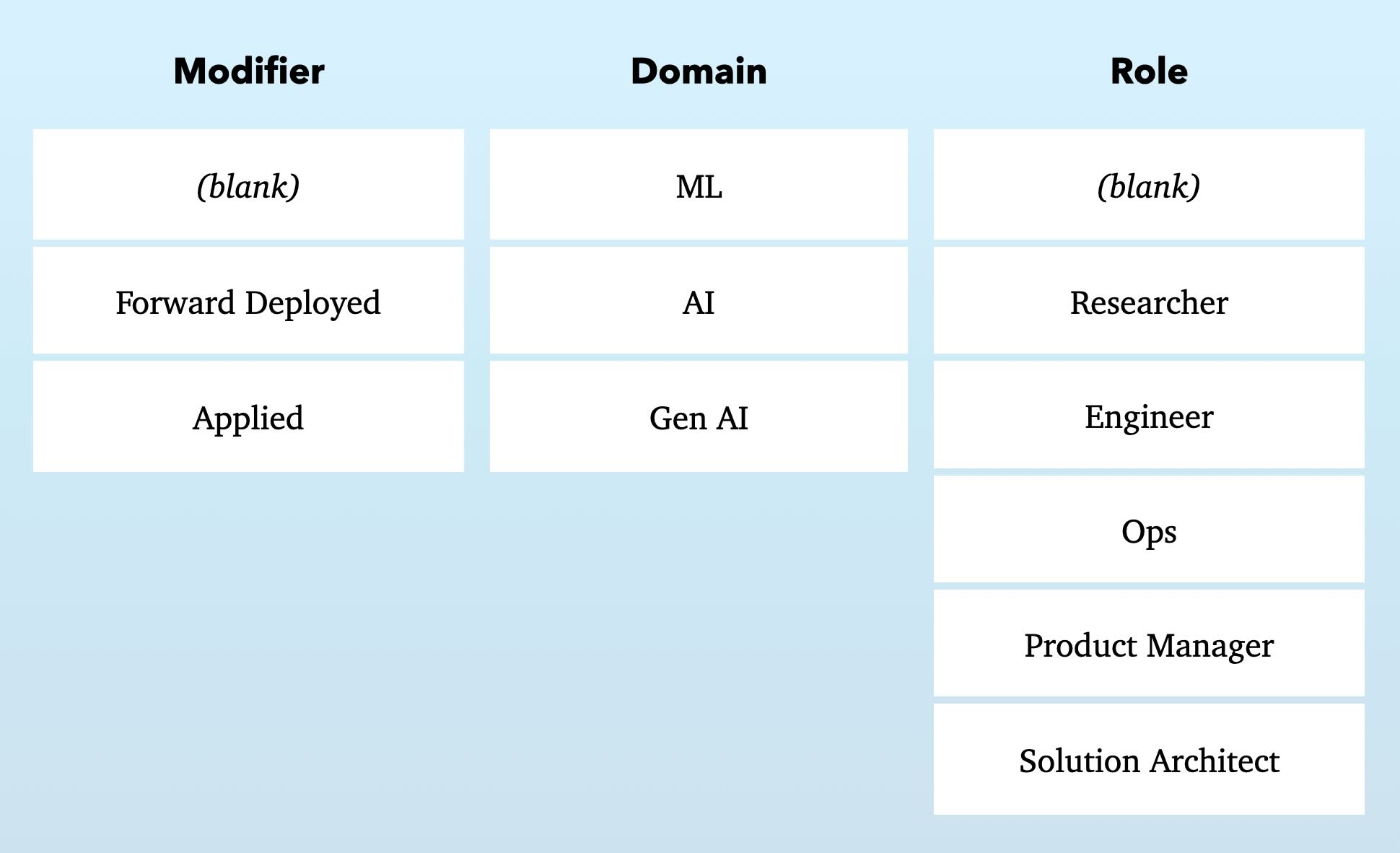

If we swap out the dinosaur parts above with the adjectives and nouns from my collected examples, we get:

Sliding these columns up and down, we can assemble most AI job titles. (Though I have yet to see some combinations, like “Applied AI Ops.”)

Let’s first break down the modifiers:

- Forward Deployed: People who work closely with customers, helping them develop new applications powered by their own company’s technologies. They learn their customer’s business, constraints, and goals, then translate that context directly into features, integrations, and working code.

- Applied: People who conceive, design, support, and/or build products and features powered by AI models. The key here is that they are applying AI to a domain problem; they are not helping build the AI itself.

There is plenty of overlap here: most Forward Deployed workers are working on Applied problems. They usually aren’t training new models with the customer.

The domain column is rather awkward, mostly for historical reasons.

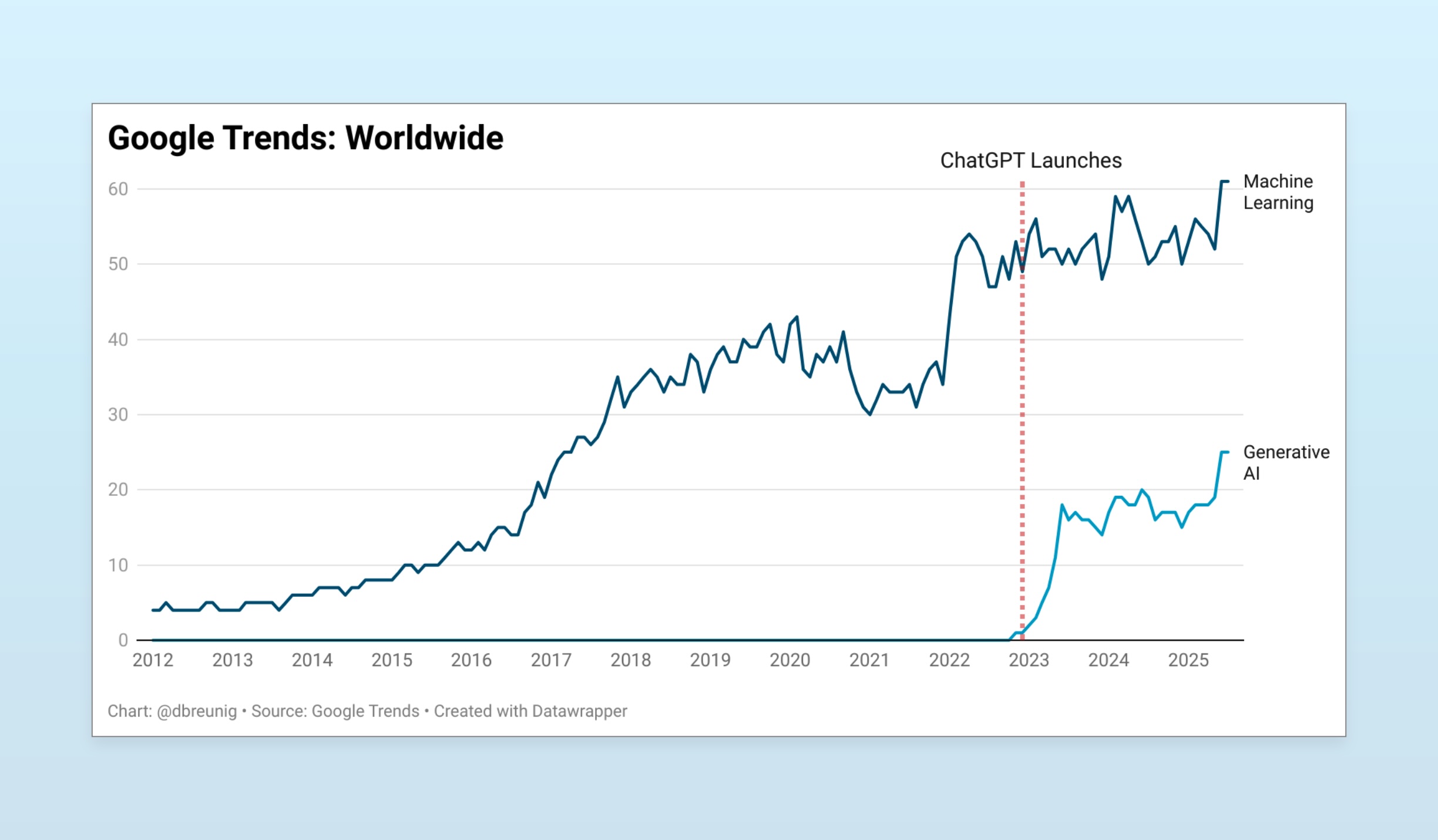

The terms “ML” and “Gen AI” are subsets of the broader “AI” domain. “Gen AI” as a term only arrived after ChatGPT launched, as a way to distinguish the now-famous chatbots and image generation from everything else people with “AI” titles had been working on prior to November 2022.[1]

While the term was initially coined to cordon off text and image generation applications, I think its utility is waning. LLMs are being used for non-generative applications – like categorization, information gathering, comparisons, data extraction, and more – that were traditionally the domain of what we used to call “machine learning” and “deep learning.”[2]

That said, when you see these domains in a title, here’s how you should interpret them:

- AI: A general, catch-all domain for people working in AI. Encompasses text processing, agent building, image generation systems, chatbots, LLM training, and so much more. This is the default for this field.

- ML: This domain signals that the role will focus on training models – most likely not LLMs – for single-purpose tasks that will be used as functions in a larger pipeline or app. Examples of these single-purpose tasks include recommendation systems, anomaly detection, predictive analytics, and data extraction or enrichment.

- Gen AI: This domain signals that the role will involve working with text, image, audio, or video generation models. This role usually involves applications where the model output is directly consumed by the user. Examples of these applications include writing tools, image generators, and image editors.

The suffixes are mostly self-explanatory, with one exception: “Researcher.”

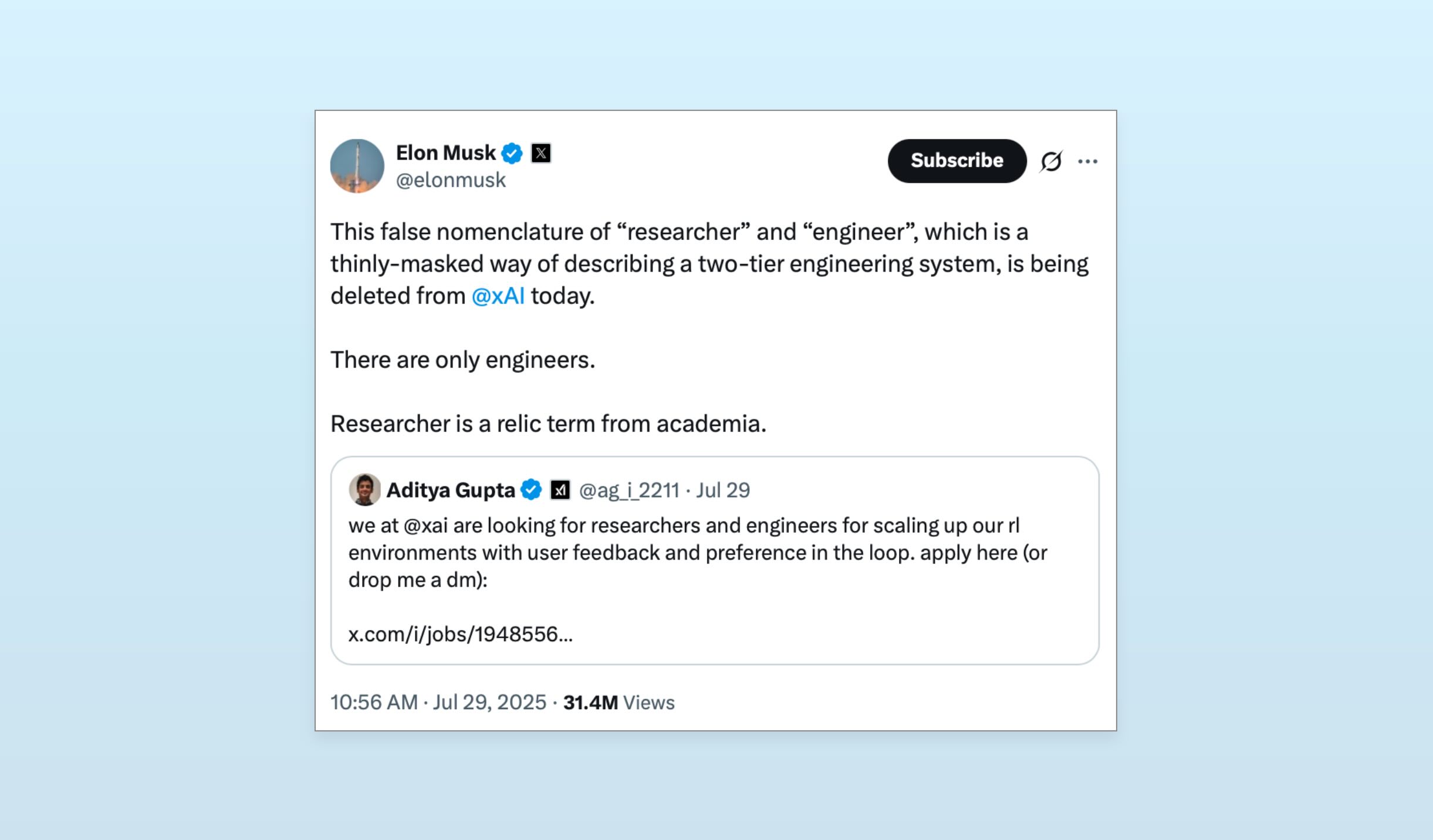

I agree with the above take.

Prior to ChatGPT, most people working on AI research and development were at universities. When private projects began standing up AI efforts, the terms “researcher” and “lab” were borrowed from academia. At first, this made sense: the work was exploratory and speculative, more akin to big science projects than product development. But as AI has become a product and a business, the term “researcher” has remained while growing increasingly awkward.

“Researcher” is a title used inconsistently. I have met “researchers” with product OKRs and incentives tied to business goals. I have met “researchers” who are working on novel LLM architectures and “researchers” who are building applications atop existing models. I have met “researchers” who are doing, well, research: exploratory work where it’s okay if a hypothesis doesn’t pan out, so long as you’re learning. Tension behind the term is increasing, hence the Elon post above.

Adding to the confusion, you’ll often see the term “Scientist” in place of “Researcher.” As far as I can tell, based on job descriptions, these terms are largely interchangeable.
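For the programmers in the audience, the three columns described above can be sketched as a few lines of Python. This is only an illustration: the column contents are my assumptions, drawn from terms used in this post rather than copied from the graphic, and `assemble_titles` is a made-up helper name.

```python
from itertools import product

# Column contents are assumptions drawn from terms used in this post;
# the original decoder-ring graphic may list more or different entries.
MODIFIERS = ["", "Applied", "Forward Deployed"]  # "" means no modifier
DOMAINS = ["AI", "ML", "Gen AI"]
ROLES = ["Researcher", "Engineer", "Solution Architect", "Ops"]


def assemble_titles(modifiers=MODIFIERS, domains=DOMAINS, roles=ROLES):
    """Return every modifier + domain + role combination as a title string."""
    titles = []
    for modifier, domain, role in product(modifiers, domains, roles):
        # Drop the empty modifier so "AI Engineer" has no leading space.
        parts = [part for part in (modifier, domain, role) if part]
        titles.append(" ".join(parts))
    return titles


titles = assemble_titles()
print(len(titles))  # 3 modifiers x 3 domains x 4 roles = 36
print("Applied AI Engineer" in titles)
print("Applied AI Ops" in titles)
```

The sketch always places the modifier first. Real postings are less tidy: some put the domain in front of the modifier, so treat the word order here as a simplification.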

Examples of AI Job Titles

Below is a handful of illustrative, real-world job titles. This list is in no way exhaustive. The goal here is to demonstrate how the modifiers, domains, and roles are assembled so we can better decode titles when we encounter them in the wild.

Example Titles

AI Researcher

An AI Researcher forms hypotheses, designs and runs experiments to test them, then shares their learnings (sometimes publicly) in pursuit of advancing the development of AI models. Often, they’re involved in productizing their findings.

This is perhaps the most discussed job title of late, thanks to Meta’s aggressive hiring, which has led to a surge of interest.

Here’s a job description for a “Research Scientist” from OpenAI:

As a Research Scientist here, you will develop innovative machine learning techniques and advance the research agenda of the team you work on, while also collaborating with peers across the organization. We are looking for people who want to discover simple, generalizable ideas that work well even at large scale, and form part of a broader research vision that unifies the entire company.

Requirements for the job include:

- “Have a track record of coming up with new ideas or improving upon existing ideas in machine learning, demonstrated by accomplishments such as first author publications or projects.”

- “Possess the ability to own and pursue a research agenda, including choosing impactful research problems and autonomously carrying out long-running projects.”

Interestingly, this job posting has been active, unchanged, since March of 2023.

Sometimes you’ll see this role listed as a “Research Scientist.”

Applied AI Engineer

An Applied AI Engineer develops applications and features that utilize AI models.

Here’s a job description for a Senior Applied AI Engineer from Google DeepMind:

We are seeking a Senior Applied AI Engineer to lead the development and deployment of novel applications, leveraging Google’s generative AI models. This role focuses on rapidly developing new features, and working across partner teams to deliver solutions, and maximize impact for Google and top customers. You will be instrumental in translating cutting-edge AI research into real-world products, and demonstrating the capabilities of latest-generation models. We are looking for engineers with a strong track record of building and shipping AI-powered software, ideally with experience in early-stage environments where they have contributed to scaling products from initial concept to production. The ideal candidate will be motivated by the opportunity to drive product & business impact.

Note the focus on applying AI technology, not developing it. If we were to drop the “Applied” title, we might find an “AI Engineer” working on producing the models themselves.

Applied AI Solution Architect

Swapping out the role from “Engineer” to “Solution Architect” yields a predictable definition.

An Applied AI Solution Architect helps customers and potential customers design and ideate features and applications powered by AI models.

Here’s a recent job description from Anthropic:

As an Applied AI team member at Anthropic, you will be a Pre-Sales architect focused on becoming a trusted technical advisor helping large enterprises understand the value of Claude and paint the vision on how they can successfully integrate and deploy Claude into their technology stack. You’ll combine your deep technical expertise with customer-facing skills to architect innovative LLM solutions that address complex business challenges while maintaining our high standards for safety and reliability.

Working closely with our Sales, Product, and Engineering teams, you’ll guide customers from initial technical discovery through successful deployment. You’ll leverage your expertise to help customers understand Claude’s capabilities, develop evals, and design scalable architectures that maximize the value of our AI systems.

If you successfully sell a client on a business case for a feature, you might call in our next role…

AI Forward Deployed Engineer

An AI Forward Deployed Engineer (FDE) is a professional services role that helps customers implement AI-powered applications and features.

After claiming that rapidly iterating AI companies will squeeze out incumbents like Salesforce, a16z backtracked and heralded FDEs as critical roles for enterprise AI adoption: “Enterprises buying AI are like your grandma getting an iPhone: they want to use it, but they need you to set it up.”

For irony’s sake, here’s a recent AI Forward Deployed Engineer role at Salesforce:

We’re looking for a highly accomplished and senior-level Forward Deployed Engineer with 5+ years of experience to lead the charge on complex AI agentic deployments. This role demands a seasoned technologist and strategic partner who can not only design and develop bespoke solutions leveraging our Agentforce platform and other cutting-edge technologies but also lead technical engagements and mentor junior peers. You’ll be the primary driver of transformative AI solutions, operating with deep technical mastery, unparalleled problem-solving prowess, and a relentless focus on delivering tangible value in dynamic, real-world environments, from initial concept to successful deployment and ongoing optimization.

As a Forward Deployed Engineer, you’ll be at the forefront of bringing cutting-edge AI solutions to our most strategic clients. This isn’t just about coding; it’s about deeply understanding our customers’ most complex problems, architecting sophisticated solutions, and leading the end-to-end technical delivery of innovative, impactful solutions that leverage our Agentforce platform and beyond.

Emphasis mine. Rapidly acquiring domain expertise is key for this role.

We’ve recently written about Forward Deployed Engineers – why they’re necessary and how they signal AI-assisted coding’s impact on product management.

AI Engineer

Remove the “Forward Deployed” and we have a significantly different job. Nailing this title down is difficult. It’s somehow vaguer than even “Researcher” titles, running the gamut from “Applied” work to foundational model building. This squishiness is explored well by Latent Space in a 2023 piece, “The Rise of the AI Engineer.” They write:

I think software engineering will spawn a new subdiscipline, specializing in applications of AI and wielding the emerging stack effectively, just as “site reliability engineer”, “devops engineer”, “data engineer” and “analytics engineer” emerged.

The emerging (and least cringe) version of this role seems to be: AI Engineer.

Every startup I know of has some kind of #discuss-ai Slack channel. Those channels will turn from informal groups into formal teams, as Amplitude, Replit and Notion have done. The thousands of Software Engineers working on productionizing AI APIs and OSS models, whether on company time or on nights and weekends, in corporate Slacks or indie Discords, will professionalize and converge on a title - the AI Engineer.

The entire piece is worth a read, though with the advantage of hindsight, their definition of “AI Engineering” seems very broad. As defined in their post, everything besides “Research”, “Product Manager”, and “Solution Architect” could fit within their definition.

The “Applied” modifier has since emerged to tighten this domain, and companies are leaning on it more. I suspect “AI Engineering” will persist as a big-tent term for conferences and communities, but “Applied” roles will be the corporate title.

Search for “AI Engineering” titles and you’ll mostly find jobs that are “Applied” roles: roles that build apps atop AI models rather than the models themselves. At the big labs, “AI Engineering” titles don’t exist on their career pages. For them, “Engineering” roles are specific to a domain, like performance, tokenization, infrastructure, or inference.

If you run into any interesting titles that make or break the decoder ring above, please do share them with me. As novel ones float by, I may grab them and update the examples above.

1. The term “Generative AI” is a pet peeve of mine. A weird theory of mine is that the term was coined by people running “AI” departments in large companies and consultancies. Upon seeing ChatGPT, their bosses or customers suddenly remembered they had people working on “AI” and promptly called them up, asking why they hadn’t made anything like ChatGPT. “AI is a big domain!” I imagine the AI departments replied. “ChatGPT is actually a subfield of AI called generative AI. We, too, can work on that if you want.”

2. A decade ago, the terms “machine learning” and “deep learning” were inconsistently used. When writing about a topic that applied to both, we’d all lean on “ML/DL” or similar composites to fend off the pedants in the comments section. Or just include notes about usage up front.