A Plea for Sober AI

The hype is so loud we can’t appreciate the magic

The OpenAI and Google I/O product announcements are over, and the hype hangovers are being nursed.

We got drunk on big claims and now we’re sobering up to the reality of the products.

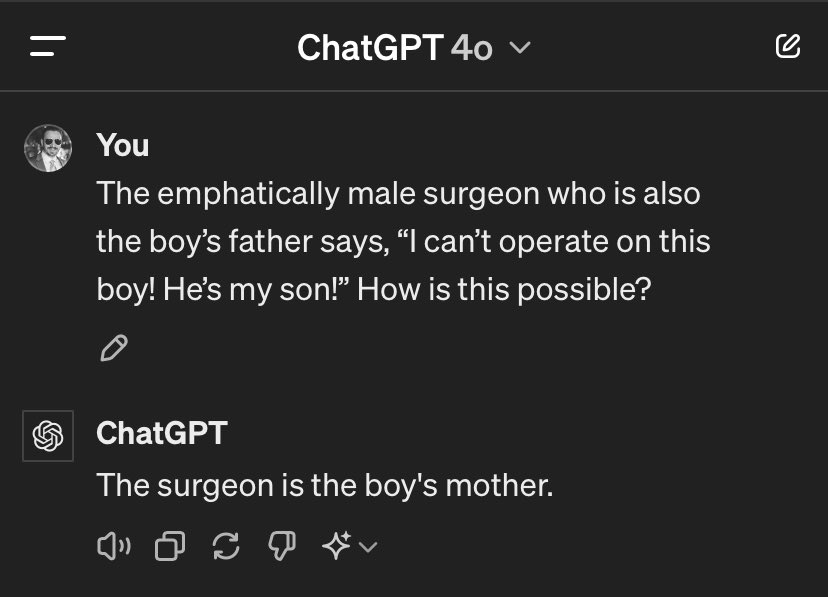

Riley Goodside, staff prompt engineer at Scale.ai, quickly embarrassed GPT-4o once it hit ChatGPT:

Not quite in line with the expectations set by OpenAI’s demo!¹

Google was no better.

In The Verge, Alex Cranz lamented that Google avoided the subject of hallucinations, despite several occurring during the keynote. The only acknowledgement of AI’s limitations was a tiny disclaimer at the bottom of some slides. The disclaimer is so small it’s unreadable in screencaps! I’ve circled it in red:

Cranz writes:

During Google’s IO keynote, it added, in tiny gray font, the phrase “check responses for accuracy” to the screen below nearly every new AI tool it showed off — a helpful reminder that its tools can’t be trusted, but it also doesn’t think it’s a problem. ChatGPT operates similarly. In tiny font just below the prompt window, it says, “ChatGPT can make mistakes. Check important info.”

So why is this hype bad? Why can’t we wallow in the promise of AI for a few days? What’s wrong with pretending these carefully managed demos are casual one-take affairs?

The hype is so loud it washes out the true magic of these products.

Oddly, the best example of this is from another of those hype hangover articles, this time Julia Angwin’s piece in The New York Times Opinion section, “Press Pause on the Silicon Valley Hype Machine.” In it she writes:

The reality is that A.I. models can often prepare a decent first draft. But I find that when I use A.I., I have to spend almost as much time correcting and revising its output as it would have taken me to do the work myself.

Read that again. And again, until the absurdity of it sinks in.

We have software that can write a “decent first draft” in a few seconds, for free or for a few cents, and we’re disappointed.

Thanks to the constant hype – from OpenAI, Google, and countless other companies and boosters² – we’re disappointed.

Imagine having products THIS GOOD and still over-selling them.

We’re constantly teased with the promise of AGI, shown flawless demos of assistants that can do anything, and continually served up empty text-box products hooked up to general models that claim to handle anything we can throw at them. The difficulties and challenges of prompting are hidden, and hallucinations are never mentioned. The end result is that we either train users to dangerously trust whatever AI slop they’re presented with, or we train them to dismiss the whole field.

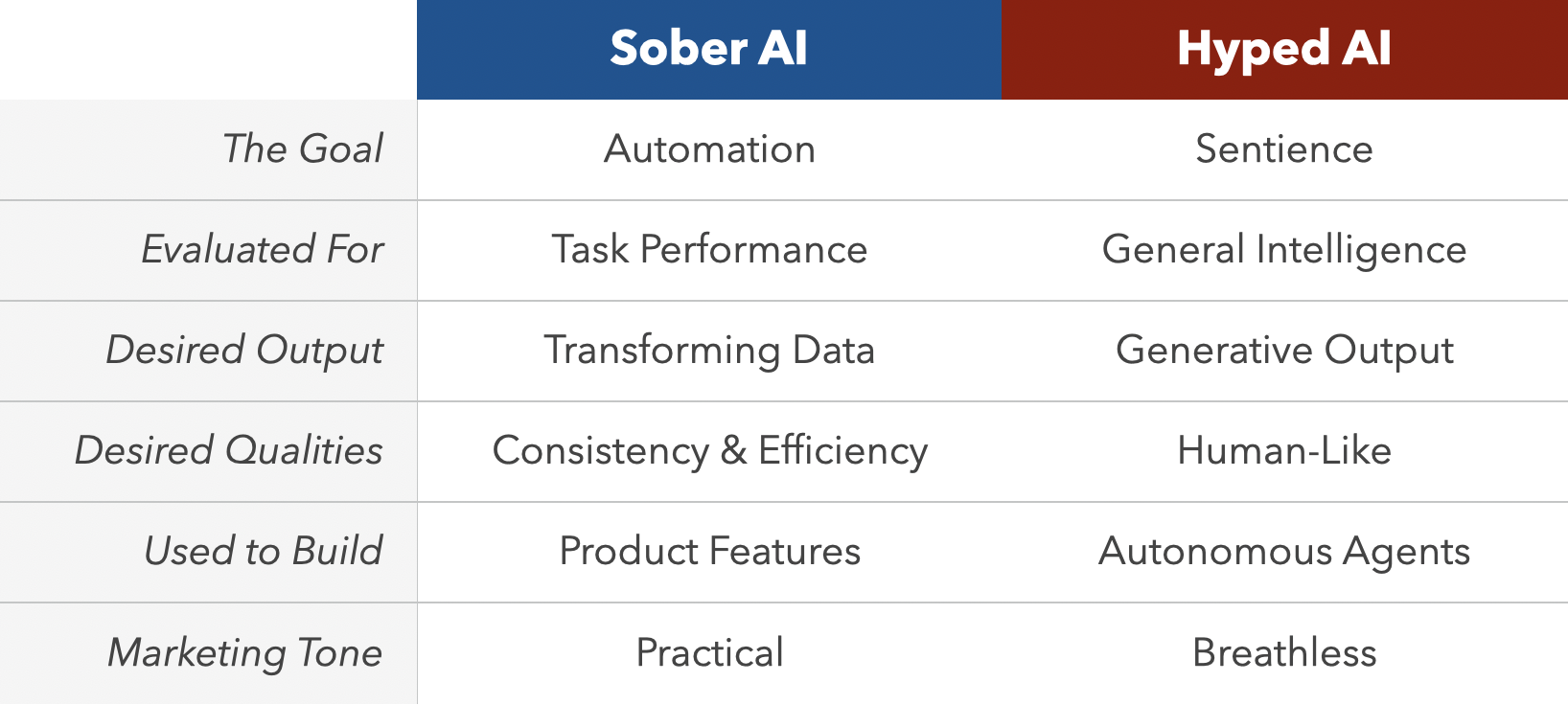

Below all this hype, there’s a quiet revolution happening. I keep meeting new companies and seeing new products that make practical use of LLMs. They use them to solve narrow problems and prioritize consistency and efficiency over giant, all-singing, all-dancing models. I keep meeting people who are amplifying their capacity and abilities by handing simple, mundane tasks to AIs and then refining and improving the results. AI-assisted coding has been a boon for early-stage startups, which are shipping products with a maturity and polish beyond their years.

This is the world of Sober AI.

I don’t think we’re going to hit AGI anytime soon. I think those who expect GPT-5 to be a leap as significant as GPT-2 to GPT-3, let alone GPT-3 to GPT-4, are going to be disappointed.

But steadily, quietly, Sober AI users and products are going to remake so much. If we dial back the hype, I bet we’ll get there faster.

1. That cringe demo lives in my head rent-free. The giggling voice, complimenting the dude for “rockin’ an OpenAI hoodie”… I wonder if the phrase “Manic Pixie Dream Girl” is in the prompt.

2. Oddly, Meta is the best behaved of the big players. It’s worth noting how they rolled out AI into their Wayfarer glasses incredibly slowly, always disclosing it as a beta or experimental feature.