In Pursuit of Quiet AI Features

An Empty Textbox Limits the Addressable Market

Last November, I suggested the GPT Store and Assistants were a hint that OpenAI had saturated their initial addressable market. In February of 2023, they had 100 million monthly active users; in November, they announced the same 100 million figure, but as weekly active users. “Engagement is on fire, but acquisition may be stagnating.”

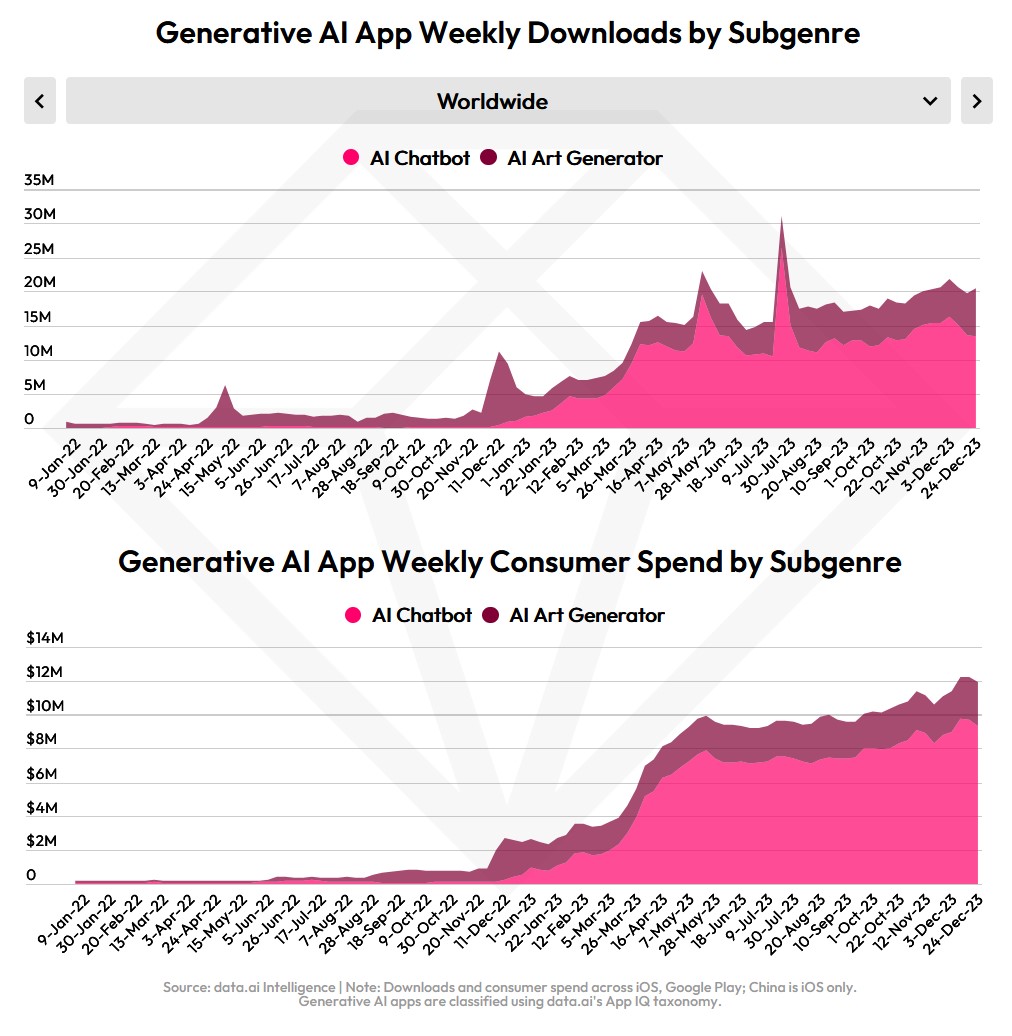

This week, data.ai released their “State of Mobile Report”, and we can now verify this hypothesis: AI chat apps hockey-sticked upward, then flatlined, despite steady revenue growth.

The problem is a UX issue: blank text fields are terrible interfaces for most users. Shortly after I published my November piece, a researcher at a leading AI image generation company reached out and confirmed my hunch. “We put people in front of a machine that will generate any image they can imagine. Yet, overwhelmingly, people just type things like, ‘A cat.’”

If we want to cross the chasm and bring AI to the masses, building better models isn’t going to be enough. We need to deploy those models smartly, in great interfaces, matching the capabilities of the models to the needs of our users.

Most AI Features Are Loud

These days, AI is so valued by investors, journalists, and users that it’s never deployed quietly. New features are promoted as “AI-powered”, personified as an “assistant”, and/or paired with the unofficial AI emoji, ✨. “AI” is a way to break through, stand out, and appear cutting edge. Why would you deploy AI quietly?

I can’t wait for the froth to fade. It’s preventing us from deploying AI thoughtfully, in small places, focused on solving specific problems for the user.

Here’s one example: emoji suggestions.

When you “add an icon” to a new page in Notion, the app randomly selects an emoji to start you off. Here it’s chosen an elevator for my new page about AI writing.

We can make this better with minimal AI, specifically embeddings.
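The idea is to turn both the page title and each candidate emoji into vectors, then pick the emoji whose vector sits nearest the title’s. As a sketch, assuming cosine similarity as the measure of closeness (the post doesn’t name one), and writing $f$ for the embedding model, $t$ for the page title, and $E$ for the curated emoji list:

$$
\hat{e} \;=\; \underset{e \in E}{\arg\max}\; \frac{f(t) \cdot f(e)}{\lVert f(t) \rVert \, \lVert f(e) \rVert}
$$

Because $f(e)$ doesn’t depend on the title, the emoji side of this comparison can be computed once and reused for every request.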

In just an hour, I whipped up a microservice that takes a string and returns a suggested emoji. It’s dead simple:

- On boot, the web app loads CLIP and a list of curated emojis.

- The app generates an embedding for every emoji in the list and keeps them in memory.

- FastAPI listens for requests; for each one, the app generates an embedding for the input string and returns the emoji with the closest embedding.
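Here’s a minimal Python sketch of that embed-at-startup, look-up-per-request flow. It is not the service’s actual code. To keep it runnable without downloading a model, CLIP is replaced by a hypothetical toy embedder (`make_toy_embedder`, word counts over a fixed vocabulary), each emoji is represented by a short hand-written description (an assumption; the post doesn’t say how the emojis were embedded), and the FastAPI layer is left out. `EmojiSuggester` and its methods are likewise illustrative names.

```python
import math
import re
from typing import Callable

Vector = list[float]
Embedder = Callable[[str], Vector]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity of two equal-length vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def make_toy_embedder(corpus: list[str]) -> Embedder:
    """HYPOTHETICAL stand-in for CLIP's text encoder: counts of each
    vocabulary word, where the vocabulary is built from `corpus`."""
    vocab = sorted({w for doc in corpus for w in re.findall(r"[a-z]+", doc.lower())})
    position = {word: i for i, word in enumerate(vocab)}

    def embed(text: str) -> Vector:
        vec = [0.0] * len(vocab)
        for word in re.findall(r"[a-z]+", text.lower()):
            if word in position:  # words outside the vocabulary are ignored
                vec[position[word]] += 1.0
        return vec

    return embed


class EmojiSuggester:
    """Embed every curated emoji once at startup, then answer each query
    with the emoji whose cached embedding is most similar to the query's."""

    def __init__(self, emojis: dict[str, str], embed: Embedder) -> None:
        self.embed = embed
        # Startup step: one embedding per emoji, kept in memory as a list.
        self.index = [(emoji, embed(desc)) for emoji, desc in emojis.items()]

    def suggest(self, text: str) -> str:
        query = self.embed(text)
        # Linear scan over the cached vectors. If every score ties (e.g. no
        # shared words), max() returns the first emoji in the list.
        best_emoji, _ = max(self.index, key=lambda item: cosine(query, item[1]))
        return best_emoji


# Assumed, hand-written descriptions standing in for a curated emoji list.
EMOJIS = {
    "📝": "memo writing notes pencil",
    "🐱": "cat kitten pet",
    "🚀": "rocket launch space",
    "🍕": "pizza food dinner",
}

suggester = EmojiSuggester(EMOJIS, make_toy_embedder(list(EMOJIS.values())))
print(suggester.suggest("Notes on AI writing"))  # 📝
print(suggester.suggest("Rocket launch plan"))  # 🚀
```

In a real service, `embed` would wrap CLIP’s text encoder and a FastAPI route would simply call `suggest` with the request’s string. For a list of a few hundred emojis, a plain linear scan like this is likely cheap enough on its own.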

All this fits within a small VM with 2GB of RAM – though I’m sure we could limbo under the 1GB limit with a little effort. You can read all the code here and try it out here.
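A quick back-of-envelope check shows the cached emoji embeddings barely register against that RAM budget. Assume, hypothetically, $N = 3{,}000$ emojis (more than a pruned list would keep), 512-dimensional embeddings (the output size of the ViT-B/32 CLIP variant; the post doesn’t say which CLIP model was used), and 4-byte float32 values:

$$
3{,}000 \times 512 \times 4\ \text{bytes} = 6{,}144{,}000\ \text{bytes} \approx 6\ \text{MB}
$$

Under those assumptions, the model weights and the Python runtime account for nearly all of the memory, so that is presumably where any effort to get under 1GB would go.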

Most of my time on this project was spent pruning boring emojis from the list and debugging deployment to Fly.io. The AI part was easy.

It’s worth revisiting the Notion example from above. So much of their AI work is heavily labeled as “AI”: copilots, page generation…all good things! But there are so many simple, quiet improvements they could make by sprinkling AI throughout.

Small Models Enable Quiet AI & Better UX

This use case, by the way, is why I am such a big believer in the power of small models with narrow expertise that are cheap and easy to run. Last week, I was asked about this excitement and why I wasn’t spending more time thinking about GPT-5 or whatever giant general model comes next. And this was a good chunk of my answer: when you have tiny models that cost barely anything to run and are tightly applied around specific user problems, you end up running them all the time, quietly enriching user experiences while staying out of the way.

When you start with giant, expensive, general models you come up with giant, expensive, generalized features. These features require user prompting – because they’re not cheap to run – so they’re constantly hidden behind explicit button presses and text fields. As a result, AI features aren’t well integrated into the app; they’re bolted-on services. And with that large price, they’re usually limited to paying users in specific contexts. And they’re loud: these are big features within the company and will be marketed as such. They can’t afford not to tell their investors, users, and the market that they’re an “AI” firm now.

As we gain expertise with smaller and cheaper models, as large models come down in price, and as the hype dies down, I hope we see some proper AI integration guided by user needs and deployed quietly. When these capabilities land in UX designers’ toolkits, we’re going to enter a renaissance of design.