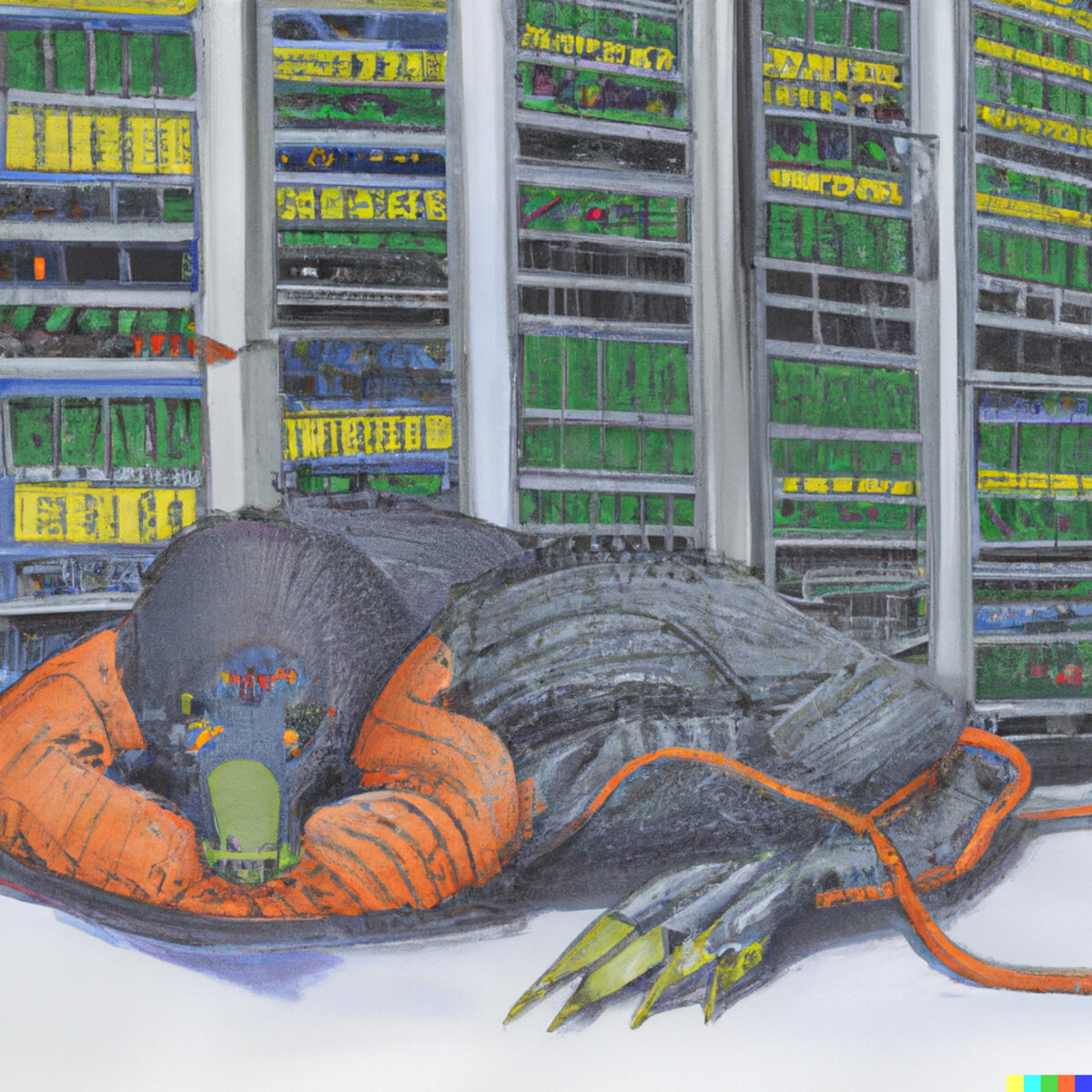

The Platypus In The Room

When trying to get your head around a new technology, it helps to focus on how it challenges existing categorizations, conventions, and rulesets. Internally, I’ve always called this exercise “dealing with the platypus in the room,” after the category-defying animal: the duck-billed, venomous, semi-aquatic, egg-laying mammal.

There have been plenty of platypus over the years in tech. Crypto. Data Science. Social Media. But AI is the biggest platypus I’ve ever seen.

(And in case you’re wondering: “platypus” is an accepted plural form of the animal’s name. Even its name defies expected rules!)

Nearly every notable quality of AI and LLMs challenges our conventions, categories, and rulesets. Let’s list a few:

1. A model is made of data but might not be “data.”

The data used to train a GPT is clearly data. It’s scraped from books, documents, websites, forums, and more. It’s collected from contractors who provide new instruction sets. All of this training data is data. But when you feed these datasets into deep learning software, the data is distilled into a model made up of “parameters.” As we covered earlier, “each ‘parameter’ is a computed statistical relationship between a token and a given context.”

Training a model is a form of data compression. You’re reducing a tremendous volume of data into something that fits onto a commodity hard disk while retaining much of the contextual meaning expressed in the original dataset. But unlike the lossless compression we usually deal with (like zipping a folder to email it to a friend), you can’t extract a model back into its input dataset.

But you can ask a model to answer prompts and complete sentences so that it spits out data it was fed. It’s not consistent. It’s sometimes completely wrong. But often enough, it’s close to what went in. An AI model continues to represent the data even though it cannot be converted back into the original training set.
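To make the compression point concrete, here’s a deliberately tiny Python sketch. It’s a toy under loud assumptions, not how a GPT works: the “model” is just a table of bigram counts (which word follows which), standing in for parameters as statistical relationships between a token and its context. The corpus and the helper names `train_bigrams` and `generate` are made up for illustration. `zlib` hands back the exact input; the bigram table can only regurgitate familiar fragments, and it has no record of which fragments belonged together.

```python
import random
import zlib
from collections import Counter, defaultdict

# Hypothetical toy corpus, invented for illustration.
corpus = "the cat sat on the mat . the dog sat on the log ."

# Lossless compression: zlib round-trips the exact original bytes.
packed = zlib.compress(corpus.encode("utf-8"))
restored = zlib.decompress(packed).decode("utf-8")

def train_bigrams(text):
    """Toy 'training': count which token follows which.

    A stand-in for parameters as token/context statistics; real LLMs
    learn far richer relationships than adjacent word pairs.
    """
    tokens = text.split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts

def generate(counts, start, length, seed=0):
    """Sample a continuation by following bigram counts from `start`."""
    rng = random.Random(seed)
    out = [start]
    for _ in range(length - 1):
        followers = counts.get(out[-1])
        if not followers:
            break  # no known continuation for this token
        words = list(followers)
        weights = [followers[w] for w in words]
        out.append(rng.choices(words, weights=weights)[0])
    return " ".join(out)

model = train_bigrams(corpus)
print(restored == corpus)          # the zip-style round trip is exact
print(generate(model, "the", 6))   # plausible, but not necessarily original
```

Because “the” is followed by “cat,” “mat,” “dog,” and “log” equally often, some seeds can produce “the cat sat on the log,” a sentence that never appeared in the corpus. The table still represents the data, yet nothing in it says which of the possible sentences were the real ones.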

This is not only awkward to talk about, but it also comes with significant regulatory implications! If a model is considered data (and one could make a strong argument it is!), it is covered by all the data laws and regulations already on the books. This is, perhaps, one reason why OpenAI’s CEO Sam Altman has recently started framing AI as “just really advanced software.”

2. Model owners are responsible for generative output but cannot own it.

- Model output is not covered by Section 230.

- You cannot copyright output straight out of a model.

It’s looking like Section 230, the US’s safe harbor law regarding platforms and the content posted to them, won’t apply to the content spewed by models. To quote the Stanford Institute for Human-Centered Artificial Intelligence:

> Legislators who helped write Section 230 in the United States stated that they do not believe generative AI like ChatGPT or GPT4 is covered by the law’s liability shield. Supreme Court Justice Neil Gorsuch suggested the same thing earlier in oral arguments for Gonzalez v. Google. If true, this means that companies would be liable for content generated by these models, potentially leading to an increasing amount of litigation against generative AI companies.

So if a model defames someone or spurs violence, its owners may be held liable where Facebook or YouTube, in the same situation, would not be. But while model owners are liable for generative content, they can’t own it. The U.S. Copyright Office has stated, in guidance published in the Federal Register, that AI output untouched by human hands cannot be copyrighted. The Copyright Office recently rejected an attempt to copyright works produced by Midjourney, writing, “The fact that Midjourney’s specific output cannot be predicted by users makes Midjourney different for copyright purposes than other tools used by artists.”

You might be liable for your model’s output without truly owning it.

3. Models might be “software” but cannot be programmed.

Does Altman’s claim that AI models are “just really advanced software” hold up? Maybe.

Definitions of “software” are very broad. It’s a bucket term that is perhaps best expressed as: everything computers do that isn’t hardware. That’s vast enough to border on meaningless. I think we can have a more interesting discussion about whether or not an AI model is a “computer program,” because our current AIs, LLMs, can’t be programmed. They can only be trained.

It’s neat that we ‘program’ LLMs with natural language prompts, but it’s worryingly imprecise. We can’t modify an LLM by reaching in and tweaking its parameters, or by removing a particular piece of training data’s contribution to its ‘knowledge’ of a specific subject. Our only way to materially modify models is to…add more training data (as static datasets or through reinforcement learning from human feedback, or RLHF).

This not only raises questions about control of model output (see above for the associated risks) but also raises the question: “Who built the model?” Was it the data that trained the model? Research released last week underscores that emergent capabilities cannot occur without massive amounts of data. We can’t yet substitute clever programming for data quantity…and we’ve been trying for years.

AI strains against our existing definitions, taxonomies, and rulesets: it’s the platypus in the room. Trying to fit AI into our existing regulations and development patterns is shortsighted and, at best, only a temporary fix.

We need new frameworks to accommodate this platypus.