How Model Use Has Changed in 2025

From ‘Naked’ Model Endpoints to Tool-Using, Reasoning Environment Endpoints

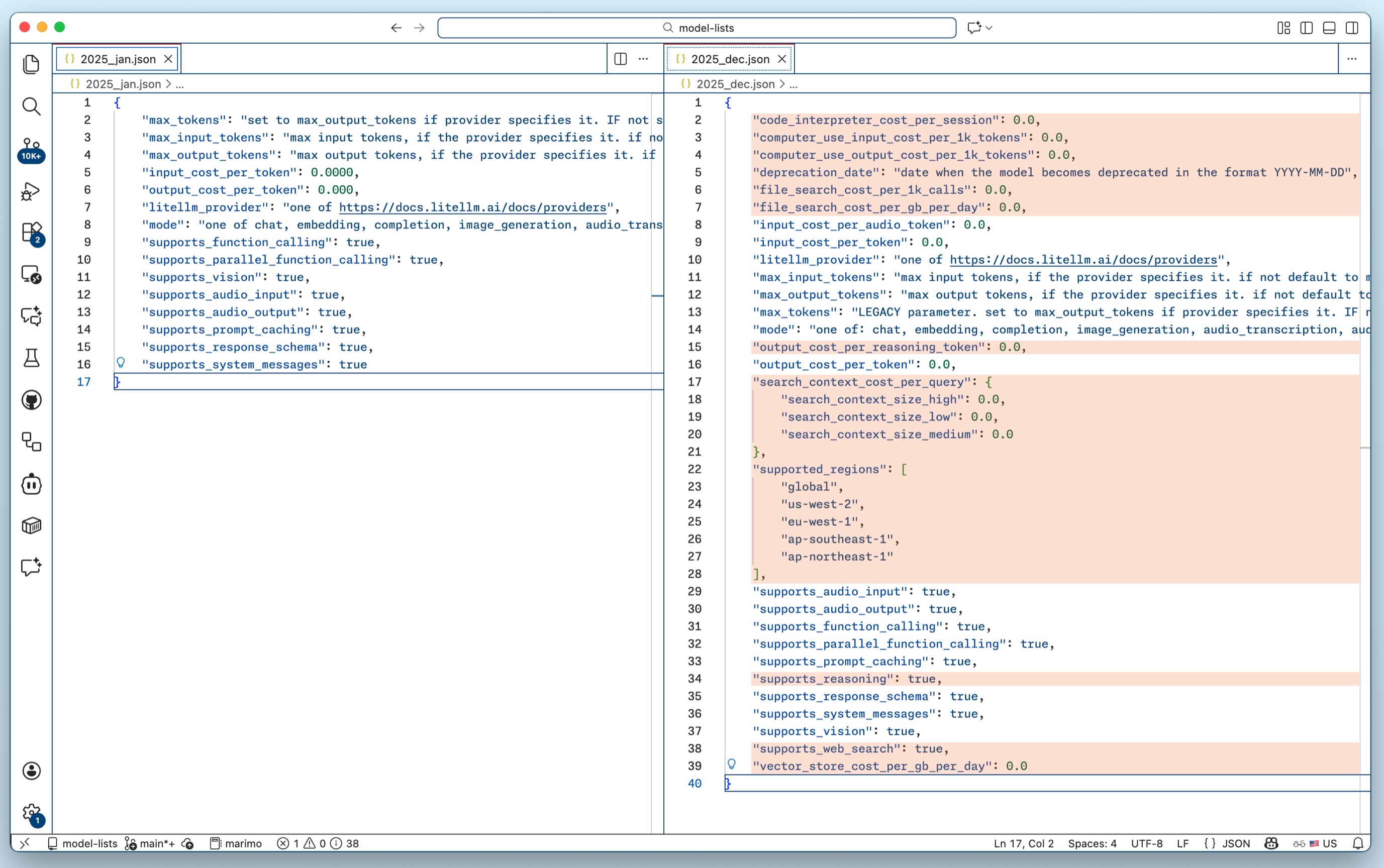

I was poking around LiteLLM’s GitHub repository and stumbled upon an interesting file: model_prices_and_context_window.json, a registry of all the models and inference providers you can call with LiteLLM. This is the core value of LiteLLM: it wraps a diverse array of models behind a consistent yet capable API, so applied AI builders can swap out models and providers without a major code rewrite.

This registry file is impressive, and it communicates the value of LiteLLM well. It’s over 30,000 lines detailing over 2,000 model and provider combinations. At the top of the JSON file, LiteLLM provides a sample_spec, their schema for the information they store for each model. Curious, I poked into the repository’s commit history to see how this schema has evolved over the months.
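If you want to poke at a registry shaped like this yourself, here is a minimal sketch. It assumes the file is a single JSON object keyed by model name, with the schema stored under a sample_spec key as described above. The toy entries, the provider names, and the helper functions split_registry and count_by_provider are all made up for illustration, and the real file's fields may differ.

```python
import json
from collections import Counter

# Toy stand-in for the registry. The real file is far larger, and these
# model names, providers, and fields are hypothetical placeholders.
SAMPLE_REGISTRY = json.loads("""
{
  "sample_spec": {"litellm_provider": "name of the provider", "mode": "chat"},
  "example-model-a": {"litellm_provider": "provider-x", "mode": "chat"},
  "example-model-b": {"litellm_provider": "provider-x", "mode": "embedding"},
  "provider-y/example-model-a": {"litellm_provider": "provider-y", "mode": "chat"}
}
""")


def split_registry(registry):
    """Separate the schema entry from the actual model entries."""
    models = {name: entry for name, entry in registry.items() if name != "sample_spec"}
    return registry.get("sample_spec", {}), models


def count_by_provider(models):
    """Count model entries per provider, using 'unknown' when the field is missing."""
    return Counter(entry.get("litellm_provider", "unknown") for entry in models.values())


spec, models = split_registry(SAMPLE_REGISTRY)
print(f"{len(models)} model/provider combinations")
print(count_by_provider(models).most_common())
```

To run this against the real thing, you would swap the inline JSON for a json.load call on a local copy of the file.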

And boy if this isn’t the story of LLMs in 2025:

On the left is the schema on January 1st, 2025. On the right is the schema today. The orange lines were added in 2025. The schema has doubled in size, as more and more tools and logic have been embedded in models and their providers. We aren’t just asking for text completion or chat. A good chunk of us are now hitting a single endpoint that can execute code, use a computer, manipulate files, and search the web. These types of calls are being made to an appliance, not a function, one that comes with its own environment for carrying out a task.
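The comparison itself is easy to reproduce in spirit. Below is a rough sketch that treats each schema snapshot as a flat dictionary and lists the keys present in the newer one but not the older one. The two snapshots and the added_fields helper are illustrative stand-ins, not copies of the actual January or current schema.

```python
def added_fields(old_spec, new_spec):
    """Return the top-level keys that appear in new_spec but not in old_spec."""
    return sorted(set(new_spec) - set(old_spec))


# Hypothetical, heavily trimmed snapshots; the real schemas are much longer.
OLD_SPEC = {
    "max_tokens": "maximum tokens",
    "input_cost_per_token": 0.0,
    "supports_function_calling": True,
}
NEW_SPEC = {
    "max_tokens": "maximum tokens",
    "input_cost_per_token": 0.0,
    "supports_function_calling": True,
    "supports_web_search": True,
    "supports_computer_use": True,
}

for field in added_fields(OLD_SPEC, NEW_SPEC):
    print("+", field)
```

This only compares top-level keys. Nested objects in a schema would need a recursive walk to surface additions inside them.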

2025 may not have been the year of the agent, but perhaps it was the year of the tool.

Now, of course, this isn’t everyone. Such an appliance is essentially a black box, and it is difficult to eke reliability out of one when your agent or application is struggling. We still have and use ‘naked’ inference calls all the time.

But for human-in-the-loop chat apps, the surface area of what happens behind a model call is growing in size and structure.