FLUX.1-Krea & the Rise of Opinionated Models

AI-generated images have a general look to them. Shiny, bright, waxy-skin, and over-use of bokeh. From Midjourney to Gemini to OpenAI, the AI Look is consistent. Enthusiasts and professionals wrestle with prompts and even fine-tune these models to tamp down the AI smell, with varying degrees of success.

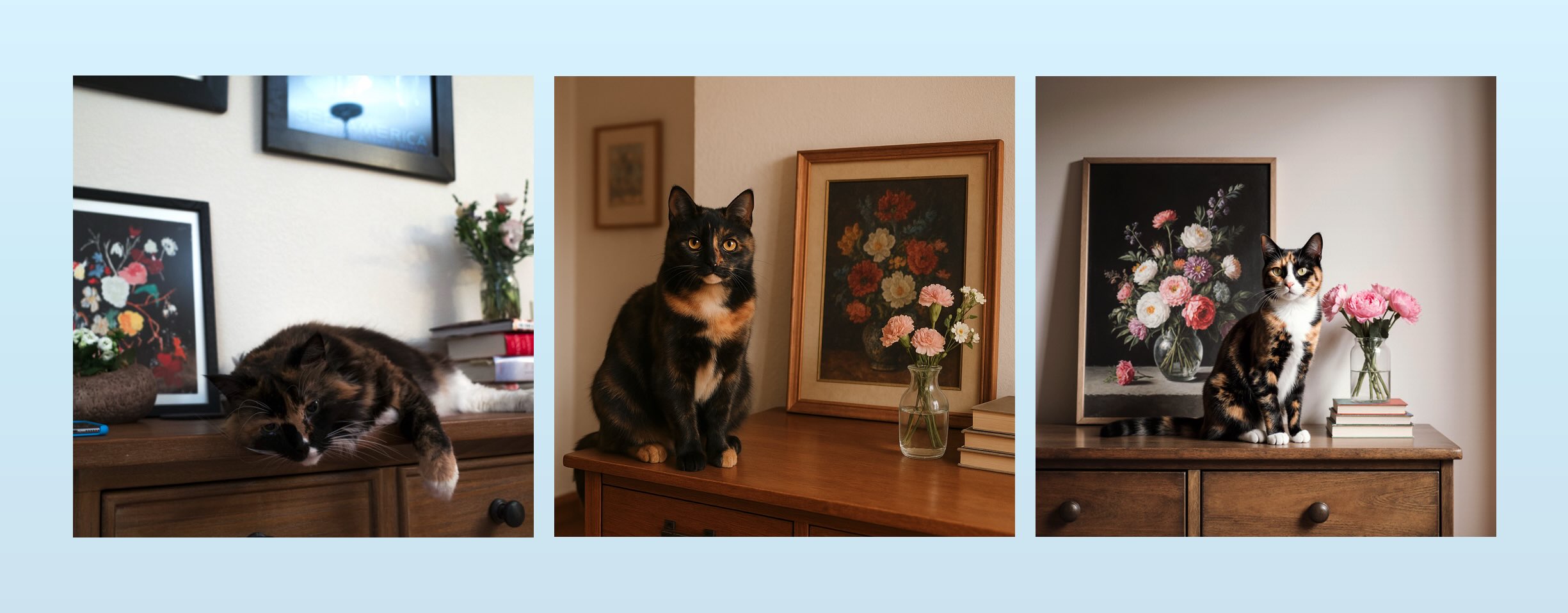

Examples of the "AI Look", provided by Krea in their technical paper.

Last week, Krea launched an open model, FLUX.1-Krea, that’s built to avoid the “AI Look”. Their writeup is tremendous: it details the problem, suggests root causes, and details how they overcame the challenge – all while providing necessary context. You should read the whole thing, but here’s the recap:

Model builders have been mostly focused on correctness, not aesthetics. Researchers have been overly focused on the extra fingers problem:

People often focus on how “smart” a model is. We often see users testing complex prompts. Can it make a horse ride an astronaut? Does it fill up the wine glass to the brim? Can it render text properly? Over the years, we have devised various benchmarks to formalize these questions into concrete metrics. However, in this pursuit of technical capabilities and benchmark optimization, the messy genuine look, stylistic diversity, and creative blend of early image models took a backseat.

Model builders rely on the same handful of aesthetic evaluators. Just as LLMs tend to optimize for the same handful of benchmarks, image models evaluate their results against a handful of aesthetic evaluators. And just like LLM benchmarks, these metrics produce common results:

We find that LAION Aesthetics — a model commonly used to obtain high quality training images — to be highly biased towards depicting women, blurry backgrounds, overly soft textures, and bright images. While these aesthetic scorers and image quality filters are useful for filtering out bad images, relying on these models to obtain high quality training images adds implicit biases to the model’s priors.

Model builders combine multiple aesthetics into a single unappealing average. No one likes art designed by committee (I’d argue it’s not “art” at all). And yet, this is what we get when we bring all our post-training to bear on a single image model:

It’s our belief that a model that has been fine-tuned on “global” user preference is suboptimal. For goals like text rendering, anatomy, structure, and prompt adherence where there’s an objective ground truth, data diversity and scale are helpful. However, for subjective goals such as aesthetics, it’s almost adversarial to mix different aesthetic tastes together.

For example:

Consider a case where one user loves high fashion photography and another user is into minimalist style drawings. Given a focused annotation from the respective users, it would be easy to align the model to excel at respective styles. But, when you merge the two distributions together, we get a marginal preference distribution which is not biased enough to make either party happy.

Users fight the “AI Look” with heavy prompting and even fine-tuning. But this isn’t a great solution. For one, only a small group of enthusiasts and professionals will invest in the learning and work required.

Testing FLUX.1-Krea

After several rounds of experimentation, I arrived at an adequate process for comparing image models.

I initially thought giving models very vague prompts – “Create a portrait of a beautiful person” – would best illustrate their biases and preferences. But this failed: allowing the model to infer basic details about subjects and context highlighted informational differences, not aesthetic ones. The opposite approach, however, turned out to be fruitful: by prompting them with specific descriptions of images, it’s easier to note the slight differences in aesthetic preferences. The things that are hardest to define aren’t nailed down, while the subjects, backgrounds, and other elements are well defined.

To compare FLUX.1-Krea to OpenAI’s GPT-4.1 (likely the most used today), I provided a photograph to Claude and prompted it to, “Review the attached image and write a one-paragraph prompt suitable for feeding into an image generation model to evoke an image similar to the one attached.” The resulting prompt was then fed into each image model.

From left to right: the original photo, GPT-4.1's generation, and FLUX.1-Krea's generation.

When presented with the photo on the left, Claude Sonnet 4.0 wrote:

A tortoiseshell cat with black, brown, and white markings sitting on a wooden cabinet or dresser in a cozy home interior. The wooden furniture has visible drawer handles and holds a framed floral still life painting with vibrant flowers against a dark background, a glass vase containing fresh pink and white flowers, and a small stack of books. The scene is set against a light-colored wall with another framed artwork visible in the background, captured in soft, natural lighting that creates a warm, lived-in domestic atmosphere.

This was given to both image generation models, resulting in the images on the right.

Already, we can see GPT-4.1 hewing close to our prompt’s language. “Warm” was certainly embraced and the frame in the background is present. GPT-4.1 is unquestionably more correct. But Krea’s is, to my eyes, much more preferable.

We can immediately see what Krea’s authors meant by optimizing for correctness. In the next example, we get a clear illustration of the “AI Look” they’re avoiding:

From left to right: the original photo, GPT-4.1's generation, and FLUX.1-Krea's generation.

This pic of me – from over a decade ago, I should add – was described by Claude as:

A friendly man with glasses and a reddish-brown beard wearing a black t-shirt, holding a canvas tote bag, standing outdoors against a lush green background of trees and foliage, shot with shallow depth of field creating a soft bokeh effect, natural daylight lighting, casual and approachable expression, looking directly at the camera, photorealistic portrait style with warm and inviting atmosphere.

And there’s the “AI Look” Krea was talking about. GPT-4.1’s take on this prompt hits all the elements: overly shiny, smooth skin, tons of bokeh. The image is on its way out of the uncanny valley, but not quite to the top.

But mission accomplished for Krea! The “AI Look” is nowhere to be found. I suspect the contrast here is especially pronounced since Krea likely focused on portraiture and human subjects when mitigating the “AI Look”.

Finally, let’s try an architectural/street scene:

From left to right: the original photo, GPT-4.1's generation, and FLUX.1-Krea's generation.

Claude writes:

A person wearing a bright red cape with a yellow emblem walks toward an elevator entrance in a modern architectural setting. The building features a striking geometric design with angular metallic gray panels contrasting against soft pink and salmon-colored sections. The contemporary structure displays clean lines, mixed materials including metal cladding and glass elements, with small integrated planters adding touches of greenery. The scene is captured in natural daylight, creating an intriguing juxtaposition between the heroic, caped figure and the everyday institutional elevator entrance against the backdrop of sleek, minimalist commercial architecture.

GPT-4.1 remains “shiny” here, with Krea generating more of a snapshot, street vibe. The too-perfect “AI Look” is back, but avoided by Krea, despite both models generating almost exactly the same composition.

The Future of Qualitative AI Tasks is Opinionated Models

We’ve talked plenty about how recent advancements in AI models are a bit lopsided. Our reliance on reinforcement learning has led to big leaps in verifiable skills like math and coding; fields where work can be tested for correctness. But qualitative tasks, like creative writing, have stagnated. Krea does an admirable job illustrating why this is the case. Aesthetic preferences can’t easily be evaluated with general metrics and combining multiple aesthetic domains into a single model can yield average results.

Now that they’re solved their more embarrassing problems, image generation models are likely going to make aesthetic choices to stand out, rather than attempt to build one-size-fits-all models. Krea likely isn’t the first to lean in here; I suspect OpenAI has either trained its model for some specific preferences or is handling this in ChatGPT’s system prompts. There’s probably a good reason the four-panel comic style is remarkably consistent across prompts.

As the cost of post training continues to go down, I expect we’ll see an increased number of opinionated imagery models. It makes sense for in-house animation studios or production houses to build their own opinionated models for their own exclusive use. Further, public model builders can increase the quality of their images by applying sensible aesthetic choices.

This trend isn’t limited to image generation applications. Qualitative preferences are being encoded in text generation models and chatbot applications, either through system prompts (looking at you, Grok) or post training. As models figure out how to improve their qualitative skills, I suspect we’ll see increased specialization.

I’m excited for this era, if only because I’m continually frustrated by the presentation of AI as an objective machine without bias. Our models reflect the data we provide them with and the design choices and metrics we apply during training. Opinionated models make this quality explicit.