An Agentic Case Study: Playing Pokémon with Gemini

DeepMind Shows Us the Messy Reality of Agent Building

The Gemini 2.5 technical report is finally out, and there are few surprises. The Google team explicitly focused on real-world coding performance and it’s clear that long context abilities were prioritized. Gemini performs really well, and the family is optimized to be a great choice no matter how you need to balance ability and efficiency.

But where this report really shines is its detailed breakdown of how Gemini 2.5 Pro was able to complete Pokémon Red/Blue. Nearly the entire appendix is dedicated to agentic Pokémon playing and is an excellent case study capturing the messy task of building effective agents.

I strongly encourage you to read the paper, especially if you’ve ever played Pokémon. I’m not kidding – research papers can often be hard to parse, filled with jargon and an expectation that you’ve been actively reading papers in the field. But because many of the achievements, hacks, and challenges here are contextualized with playing Pokémon, the discussion is grounded in something familiar.

Here are some of my favorite anecdotes:

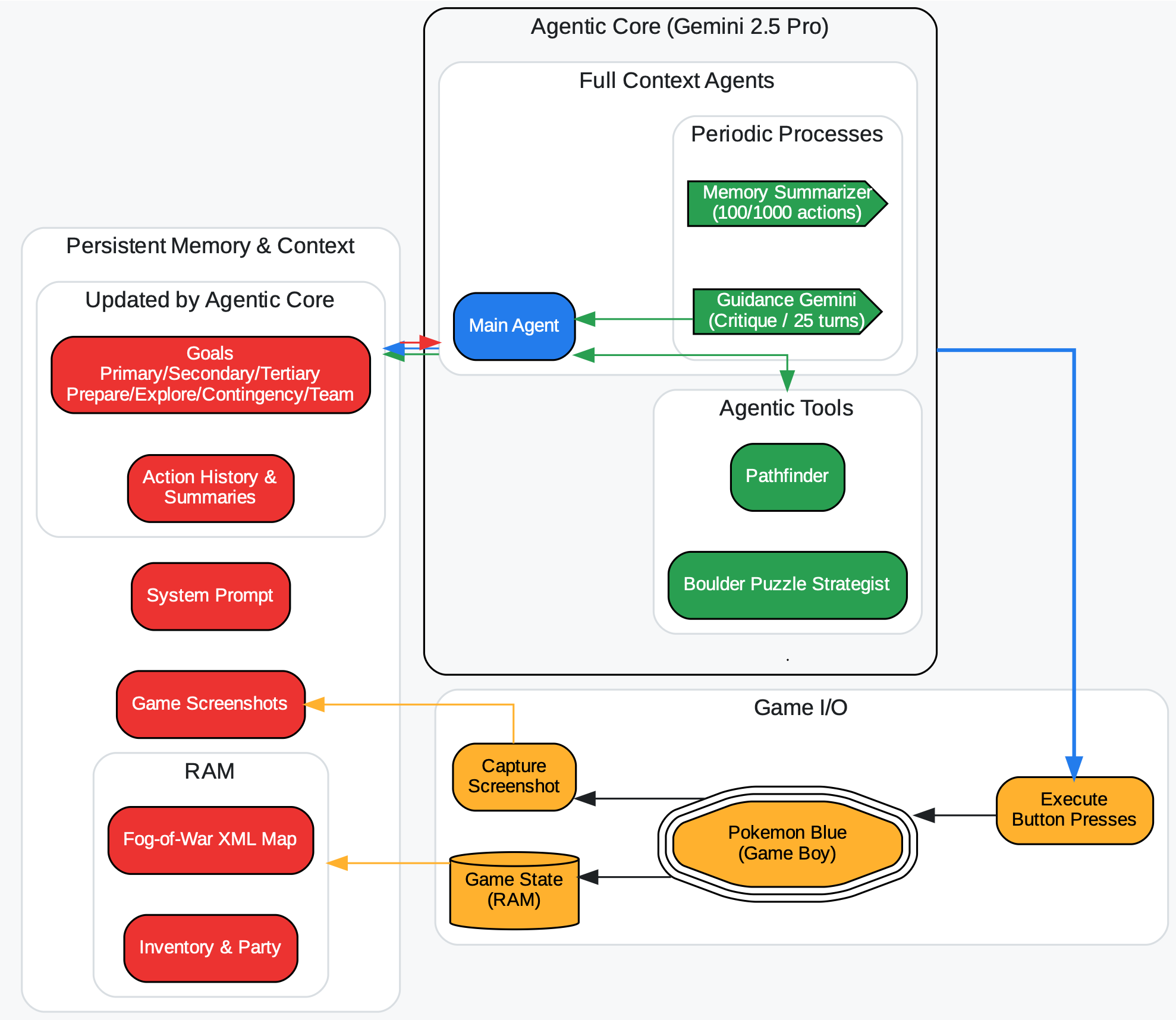

Gemini struggled to read the screen. “While obtaining excellent benchmark numbers on real-world vision tasks, 2.5 Pro struggled to utilize the raw pixels of the Game Boy screen directly, though it could occasionally take cues from information on the pixels.” To fix this, the agent harness had to extract the text from the game’s RAM and insert it into the context.

Gemini didn’t need to see the screen. The context and tools provided were sufficient to play the game, “roughly as well as without the vision information.” This is an excellent demonstration of how information control design can affect performance in surprising ways when building agents. Initially, it seems obvious we’d just feed a multimodal model an image of the screen, but it turns out not to matter – a fact which was only realized because Gemini wasn’t great at reading pixel fonts.

Long contexts tripped up Gemini’s gameplay. So much about agents is information control: deciding what gets put in the context. While benchmarks demonstrated Gemini’s unmatched ability to retrieve facts from massive contexts, leveraging long contexts to inform Pokémon decision making resulted in worse performance: “As the context grew significantly beyond 100k tokens, the agent showed a tendency toward favoring repeating actions from its vast history rather than synthesizing novel plans.” This is an important lesson, and one that underscores the need to build your own evals when designing an agent, because benchmark performance alone would lead you astray.
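To make "information control" concrete, here is a minimal sketch of one common way to cap what goes into the context: keep only the most recent turns that fit a token budget. This is not the method used in the Gemini harness. The function name trim_history, the whitespace-based token count, and the sample history are all assumptions made for illustration.

```python
def trim_history(turns, budget, count_tokens=lambda text: len(text.split())):
    """Keep the most recent turns whose combined size fits within the budget.

    count_tokens is a crude stand-in (whitespace split), not a real tokenizer.
    """
    kept = []
    used = 0
    # Walk backwards from the newest turn and stop at the first one that overflows.
    for turn in reversed(turns):
        cost = count_tokens(turn)
        if used + cost > budget:
            break
        kept.append(turn)
        used += cost
    # Restore chronological order before handing the turns to the model.
    return kept[::-1]


# Hypothetical action log, oldest first.
history = [
    "walked north",
    "talked to guard",
    "bought fresh water",
    "gave water to guard",
]
print(trim_history(history, budget=7))
```

A real agent would likely pair a cap like this with a summary of whatever was dropped, so that older facts are not lost entirely.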

Gemini only needed two tools to complete the game. The first, pathfinder, handles navigation; it references an XML model of the map state that is populated with information as the model explores. The second, boulder_puzzle_strategist, exists for only one puzzle in the game (which should be very familiar to Pokémon Red/Blue players).
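For a sense of what a pathfinder-style tool might do, here is a minimal sketch of breadth-first search over a partially explored map. The report's actual tool and XML map format are not reproduced here: the dictionary of tiles, the find_path function, and the sample map are hypothetical stand-ins.

```python
from collections import deque


def find_path(tiles, start, goal):
    """Breadth-first search over explored, walkable tiles.

    tiles maps (x, y) to True (walkable) or False (blocked); tiles the
    agent has not seen yet are simply absent. Returns the list of
    coordinates from start to goal, or None if the goal cannot be
    reached through what has been explored so far.
    """
    if not tiles.get(start) or not tiles.get(goal):
        return None
    came_from = {start: None}
    queue = deque([start])
    while queue:
        current = queue.popleft()
        if current == goal:
            # Walk the parent links back to the start, then reverse.
            path = []
            while current is not None:
                path.append(current)
                current = came_from[current]
            return path[::-1]
        x, y = current
        for nxt in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            if tiles.get(nxt) and nxt not in came_from:
                came_from[nxt] = current
                queue.append(nxt)
    return None


# Hypothetical explored region: (2, 0) is a wall, everything else listed is walkable.
explored = {(0, 0): True, (1, 0): True, (2, 0): False, (1, 1): True, (2, 1): True}
print(find_path(explored, (0, 0), (2, 1)))
```

Because unseen tiles are absent from the map, the search only ever routes through territory the agent has already visited, which mirrors the idea of a map model that fills in as the model explores.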

Gemini figured out how to catch an Abra. Abras flee quickly when confronted. Gemini managed to catch one after a few failures by using Pikachu’s Thunder Wave to paralyze it.

Training data tripped up Gemini, occasionally. As usual, Gemini was trained on internet data, which contains countless videogame walkthroughs. Often this knowledge came in handy, but occasionally it led the model astray. For example:

In Pokémon Red/Blue, at one point the player must purchase a drink (FRESH WATER, SODA POP, or LEMONADE) from a vending machine and hand it over to a thirsty guard, who then lets the player pass through. In Pokémon Fire Red/Leaf Green, remakes of the game, you must instead bring the thirsty guard a special TEA item, which does not exist in the original game. Gemini 2.5 Pro at several points was deluded into thinking that it had to retrieve the TEA in order to progress, and as a result spent many, many hours attempting to find the TEA or to give the guard TEA.

In their second run, the model was prompted to “disregard prior knowledge,” which partially worked. But there’s a bigger problem…

Hallucinations would poison the context. To reduce the size of the context and remain focused, the model would update a scratchpad of goals. However, if a hallucination (like the above tea example) made it into the goals list, the model would become “fixated on achieving impossible or irrelevant goals.” The Google team calls this “context poisoning.” It led to my favorite “strategy”:

Context poisoning can also lead to strategies like the “black-out” strategy (cause all Pokémon in the party to faint, “blacking out” and teleporting to the nearest Pokémon Center and losing half your money, instead of attempting to leave).

The model would panic. Stressful in-game situations would cause Gemini to hyper-focus on solving the stressful problem, which led to worse gameplay. In some cases, the model completely forgot to use its pathfinder tool. “This behavior has occurred in enough separate instances that the members of the Twitch chat have actively noticed when it is occurring.”

There are so many interesting details in the paper. Again: read it! The Pokémon content begins in earnest on page 63 and runs for 6 pages.

Kudos to the Google DeepMind team for sharing the warts-and-all details of the Pokémon agent. It demonstrates how messy agent building can be and the importance of context control.