Beware the Cyren's Song

Sometimes you just want to put a word on something, to crystallize it into a coherent shape so we can talk about it better. Today that word is “Cyren.”

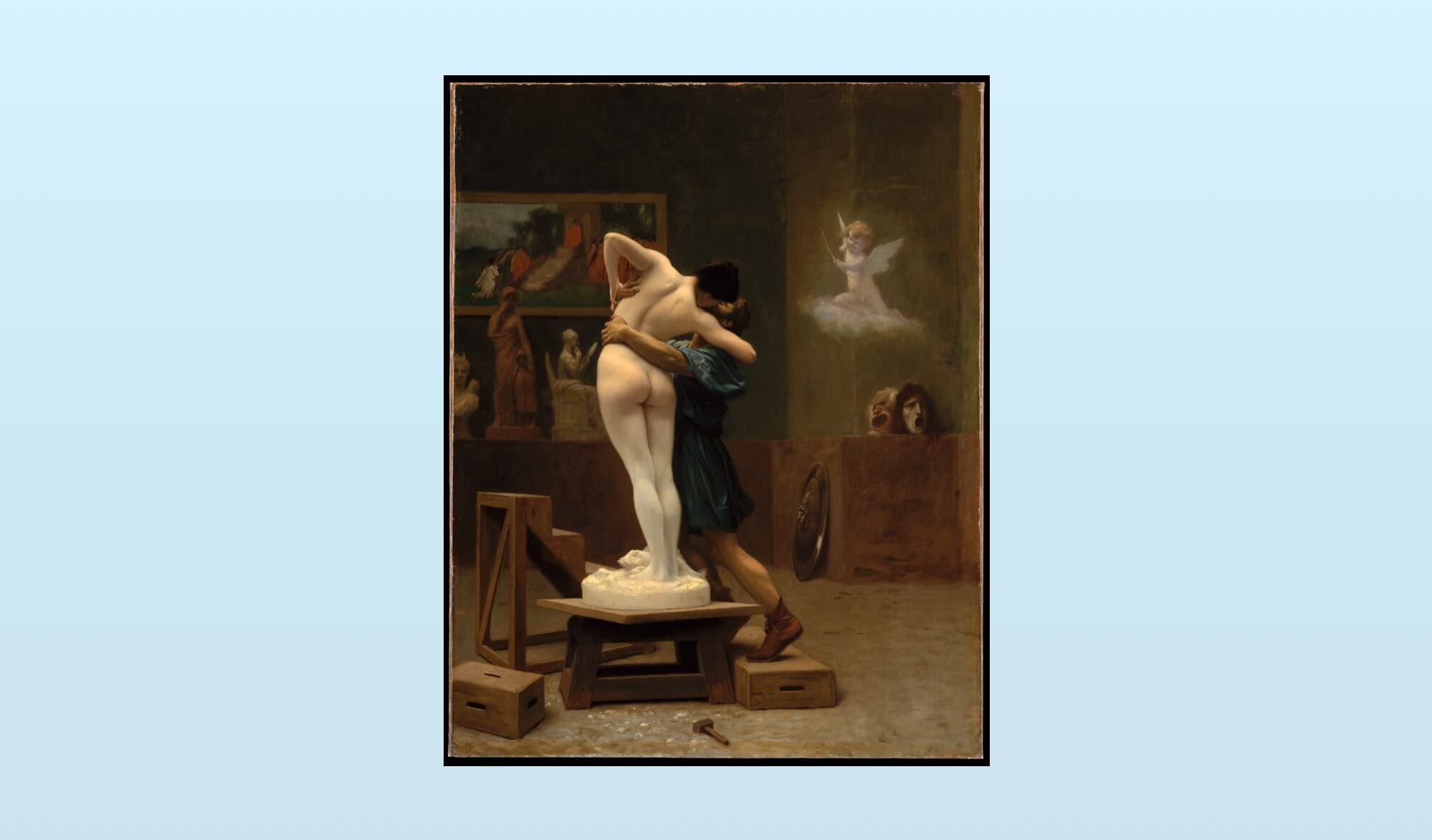

A cyren is a bot, masquerading as a human, designed to engage you in a relationship for an ulterior reason. Cyrens may be used to generate subscription revenue or advertising impressions, to spread disinformation, or just to waste your time. These bots aren’t “honeypots,” because there isn’t an actual human behind them. They’re “cyrens,” a portmanteau of “cyber” and “siren,” the mythical creatures whose enchanting voices and irresistible songs lured passing sailors to their doom.

Giving cyrens a name lets us better discuss and consider them, both their benefits and dangers. Recent developments suggest we’ll be needing the help.

The Cyrens of Ashley Madison

Perhaps the best example of a cyren was discovered in 2015 after a hacking group stole and leaked data from the affair-seeker social network, Ashley Madison. Annalee Newitz, digging beyond the initial doxxing of millions of would-be cheaters, found evidence that a good chunk of female accounts on the site were bots, dubbed “engagers.”

Revisiting the incident last July, Newitz wrote:

What I discovered was a bizarre scam – though it was far more like Westworld than US reality show Cheaters. The company had systematically created an army of fake women, mostly very simple chatbots called engagers, who would flirt with men to lure them into paying for a subscription to the site.

As we poured over the code, we found that, although there were a few human women on the site, more than 11 million interactions logged in the database were between human men and female bots. And the men had to pay for every single message they sent. For most of their millions of users, Ashley Madison affairs were entirely a fantasy built out of threadbare chatbot pick-up lines like “how r u?” or “whats up?”

Newitz goes on to argue that the Ashley Madison “engager” story foreshadowed the AI present of 2024:

Nine years later, this could describe any number of social media sites that have become swamped with bots and AI-generated absurdity – and charge you for the privilege of interacting with techno-phantoms. Currently, Facebook is trying to figure out how to deal with millions of fake images generated by AI, while Google’s AI bot Overviews is telling users to glue cheese to pizza. The problem is, human beings are interacting with these AI images and suggestions, in some cases imagining they are engaging with real people.

Now, these examples aren’t cyrens. The Facebook example is slop: AI-generated content shotgunned onto Facebook, Instagram, and other platforms to farm engagement. Google’s pizza-glue incident was the result of a badly designed AI feature that either hallucinated or grabbed bad information and repackaged it.

But the cyrens are coming.

Cyrens as a Service

Here’s Christina Criddle and Hannah Murphy, writing for the Financial Times last Friday:

[Meta] is rolling out a range of AI products, including one that helps users create AI characters on Instagram and Facebook, as it battles with rival tech groups to attract and retain a younger audience.

“We expect these AIs to actually, over time, exist on our platforms, kind of in the same way that accounts do,” said Connor Hayes, vice-president of product for generative AI at Meta. “They’ll have bios and profile pictures and be able to generate and share content powered by AI on the platform . . . that’s where we see all of this going,” he added. Hayes said a “priority” for Meta over the next two years was to make its apps “more entertaining and engaging”, which included considering how to make the interaction with AI more social.

Over at the Intelligencer, John Herrman writes:

[Meta has] surely noticed that its platforms are already filling with AI slop anyway and that some of this slop was creating a lot of engagement, meaning that, in the ways that matter most to Meta, it’s not really slop at all. The company also clearly noticed the rise of Character.ai, the popular — but possibly doomed — lawsuit magnet of an app in which young users create and chat and act out fictional scenarios with AI characters.

For those unfamiliar, Character.ai is an example of cyrens as a service – a field that also includes apps like Replika. These apps are designed to entertain, teach, or simply keep you company.

In an interview with Axios, Eugenia Kuyda – the founder of Luka, the company behind Replika – said, “It doesn’t matter if an AI is real or not, the feelings are real.” Luka and a research team at Stanford even published a study in Nature finding a decrease in suicidal ideation among users of LLM-powered chatbots. I’m not sure Luka cites this paper much today, though, given that in the ensuing months Character.ai was sued by a mother whose son died by suicide following a relationship with a Daenerys Targaryen chatbot.

The cyrens of Character.ai and Replika aren’t presented as real humans, but that’s not a requirement for the definition. Their cyrens still engage millions of people for hours a day, encouraging subscriptions to premium tiers.

Which is probably why Meta is rolling out its own.

Lash Yourself to the Mast

I don’t think chatbots are inherently bad, so long as users know they’re talking to a bot and the bots don’t begin to replace human interaction. When either condition fails, I worry.¹

I worry Meta – and others – will find it’s easier to generate conversation partners than it is to discover conversation partners.

We don’t need more reasons to avoid interactions with people different from us.

And while a disclosed Facebook cyren boosting stories with synthesized commentary is much better than slop (it reminds me of an automated DJ on a radio station), the Character.ai cases demonstrate both the dangers and the fine lines we’re walking here. Humans are hard-wired to see humans everywhere. Even when we disclose that a cyren is a cyren, we fall for the façade. As Kuyda said, “It doesn’t matter if an AI is real or not, the feelings are real.”

I believe chatbots can help ease loneliness. I also believe they can increase isolation.

I do not believe we can perfectly balance this dynamic, even if we could perfectly control the output of LLMs (remember pizza glue?). A safer course is to err toward worse, imperfect cyrens rather than pursue incredibly convincing, aligned ones.

I don’t worry about superintelligent AGIs taking over the world. I worry about bots convincing people they’re having an emotional connection when they’re not.