The Rise of Audio AR

The Right Information, At The Right Time, Without Looking

For a bit there, virtual reality (VR) and augmented reality (AR) were being heralded as the next big thing.

Meta went all-in, spending billions on Oculus R&D annually and eventually rebranding the company to signal its focus. Microsoft’s HoloLens had plenty of buzz, Magic Leap was the darling of the tech press and investors, Sony shipped millions of PSVR units, and Apple was rumored to be working on something big.

But the reality never caught up with the hype.

Oculus has yet to find its killer app and no one’s quite sure what to do with Apple’s Vision Pro. ChatGPT arrived and AI quickly became the next big thing, bumping VR/AR to the back of the line.

Even Meta seems to talk more about LLaMA than AR these days.

But quietly, the pieces have been coming together for a different kind of AR: Audio Augmented Reality (AAR). Thanks to the rise of smart headphones, improved voice recognition and generation, AI language models, and better contextual data, AAR is set to slowly but surely seep into our daily lives. AAR will deliver the right information, at the right time, without requiring us to change our focus. It’s a monumental shift in how we interact with technology, and it’s coming sooner than you think.

The Pioneers of Audio AR

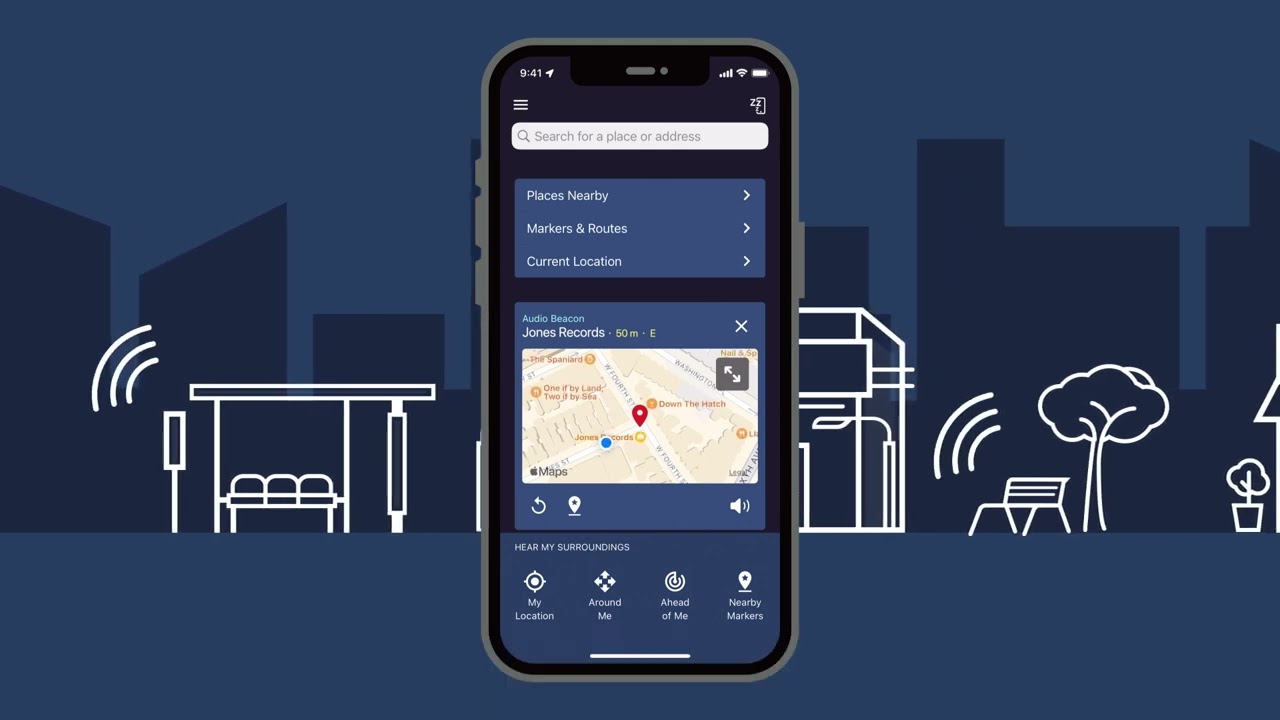

The concept of Audio AR isn’t entirely new. Microsoft’s Soundscape and FourSquare’s MarsBot were two early attempts at delivering an Audio AR experience.

Microsoft’s Soundscape was developed primarily as an accessibility tool for the visually impaired. As users walked through a city, spatial audio pings would notify them of surrounding points of interest, from laundromats to restaurants. It demonstrated the potential of using spatial audio and location-based alerts to augment reality. However, in dense urban environments, it created an overwhelming cacophony of sounds. And in suburban areas, it was too sparse to be very useful.

FourSquare’s MarsBot took a more personalized, data-driven approach. It leveraged FourSquare’s extensive database of places, their ratings, and reviews to predict and recommend locations a user might find interesting. But MarsBot only thrived in a high-density environment like New York City. It was an experiment – one with great thinking about what it means to be an audio-first AR app – that never really landed. It struggled to be truly passive, demanding too much user attention to filter through irrelevant alerts.

These apps, and experiments like them, broke important ground and showed Audio AR could be used for more than simple turn-by-turn navigation. Soundscape showed the power of spatial audio and location-based notifications for wayfinding. MarsBot pioneered using personalized data and experimented with more humane notifications. But it seems only a very specific set of nerds like myself took notice. Their ideas lay dormant for a few years, waiting for the right enabling technologies to arrive.

The Arrival of Enabling Technologies

Several key technologies have emerged in recent years, enabling a new generation of Audio AR applications:

- Smart wireless headphones like Apple’s AirPods have seen massive adoption, putting an always-available, hands-free audio interface in many people’s ears. They deliver high-quality sound and give developers access to a range of controls and sensor data, from volume to head movement to biometrics. Built-in microphones and simple controls make it easy to trigger assistants and audio apps.

- Voice recognition has made huge leaps thanks to improved noise cancellation, language models, and edge computing. Talking to virtual assistants now feels almost as natural as conversing with a person. You can speak to them casually without having to modify your speech. Speech-to-text is now fast and accurate, without the need for a network connection.

- Text-to-speech engines can now generate human-like voices with realistic inflection and intonation. The latest models are hard to distinguish from a real human, especially in short exchanges. This allows for the fluid generation of dynamic content.

- Large language models can engage in open-ended conversation, answer follow-up questions, and even take actions on the user’s behalf. They translate imperfect and inconsistent voice commands into programmatic actions enabling a more complex interface without a screen. Further, LLMs can be used as decision engines for what content to surface and when.

- Rich location and context data allow Audio AR apps to deeply understand the user’s environment and current situation. This includes detailed place data, real-time weather and traffic, calendar and messaging data, and more. It’s impossible to serve the right bit of information at precisely the right time without this data.

Putting it all together, we can now deliver highly contextual and personalized audio content and interactions to users as they go about their day. The challenge now moves from building enabling technologies to building the UX.

The Problem to Be Solved

The core UX challenge for Audio AR applications is delivering the right information at the right time.

Too much irrelevant information becomes overwhelming. Too little and the experience isn’t very useful. The sweet spot is frustratingly narrow.

To illustrate this challenge, let’s first establish a starting point with an Audio AR app and use case that works well: audio tours.

A few weeks ago, I traveled to Ghent, Belgium for an Overture Maps Foundation meeting (speaking of improved access to location data…). Having some time to explore the first morning, I checked out an audio tour of the city on VoiceMap. I have no idea how good other tours are, but this tour was perfection. So good, it suggested the potential of Audio AR.

VoiceMap’s tours work by using your phone’s GPS to trigger audio descriptions tied to specific waypoints. As you walk, the narrator gently directs you where to go next, providing landmarks where the next segment of the tour will pick up. VoiceMap helps creators time their content to specific route segments, (in this case) achieving a relaxed yet seamless experience as you stroll. If you pause to duck into a cafe to sit for a moment, the next cue isn’t triggered and the audio pauses. Walk outside and the content picks back up. The interface was my headphones and my location, that’s it.
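To make the mechanics concrete, here’s a rough sketch of how a GPS-triggered tour like this might work. I have no idea how the real app is built; the `Waypoint` and `Tour` names, the trigger radius, and the clip filenames are all made up for illustration. The only real math is the haversine formula for the distance between two coordinates.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two lat/lon points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


@dataclass
class Waypoint:
    # Hypothetical structure: one audio clip tied to one spot on the route.
    name: str
    lat: float
    lon: float
    radius_m: float
    clip: str


class Tour:
    """Plays clips in order; a clip fires only when the listener reaches the next waypoint."""

    def __init__(self, waypoints):
        self.waypoints = list(waypoints)
        self.next_index = 0

    def on_location(self, lat, lon):
        """Return the clip to play for this GPS fix, or None if nothing should play."""
        if self.next_index >= len(self.waypoints):
            return None  # tour finished
        wp = self.waypoints[self.next_index]
        if haversine_m(lat, lon, wp.lat, wp.lon) <= wp.radius_m:
            self.next_index += 1
            return wp.clip
        return None  # not there yet, so the tour stays quiet
```

Because only the next waypoint is ever checked, ducking into a cafe naturally pauses the tour: no new cue fires until you walk back onto the route.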

But, to borrow a term from game design, I was on rails.

The content and notifications were perfect because I’d purchased and started the tour. VoiceMap knew where I was going and the information I wanted. For a small slice of Ghent, it was the perfect Audio AR experience. I started wondering what it would take to cover the entire city in content and cues, enabling me to walk wherever I wanted. But even then, I’d turn the tour off if I was taking a path I’d already walked or if I just didn’t want to be disturbed for a while. Even with content coverage, cues would get tricky quickly and require more than just my current location. Time of day, my calendar, my past location history – all of this would figure into how the content should be surfaced.
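Here’s a toy version of what that gating might look like, just to show how quickly the inputs pile up. Everything in it is an assumption for illustration: the `ListenerContext` fields, the quiet hours, and the 15-minute meeting buffer are invented, and a real app would need far more nuance than a handful of hard rules.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import Optional, Set


@dataclass
class ListenerContext:
    # Hypothetical snapshot of the signals mentioned above.
    now: datetime
    place_id: str
    narrated_places: Set[str] = field(default_factory=set)  # past location history
    next_meeting: Optional[datetime] = None                 # from the calendar
    do_not_disturb: bool = False


def should_play_cue(ctx, quiet_start_hour=22, quiet_end_hour=7,
                    meeting_buffer=timedelta(minutes=15)):
    """Return True only if playing a cue for ctx.place_id seems welcome right now."""
    if ctx.do_not_disturb:
        return False  # the listener asked for silence
    if ctx.place_id in ctx.narrated_places:
        return False  # already heard this one on an earlier walk
    if ctx.now.hour >= quiet_start_hour or ctx.now.hour < quiet_end_hour:
        return False  # assumed quiet hours overnight
    if ctx.next_meeting is not None:
        until_meeting = ctx.next_meeting - ctx.now
        if timedelta(0) <= until_meeting <= meeting_buffer:
            return False  # about to walk into a meeting
    return True
```

Even this tiny gate needs three data sources beyond GPS, which is exactly why location alone doesn’t get you an ambient experience.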

Creating an always-on, ambient experience that proactively surfaces relevant information is quite the challenge.

After the tour, I looked up MarsBot to see if it was still available. It’s not, but Dennis Crowley, FourSquare’s co-founder and MarsBot’s creator, is taking another bite at the apple with a new company called BeeBot. BeeBot leverages LLMs to help with content creation and determining when to push an audio notification. Crowley was recently interviewed on Alex Kantrowitz’s Big Technology Podcast.

Like MarsBot and Soundscape, Crowley’s approach with BeeBot is to “push” content to the user. This is undoubtedly the hard mode of Audio AR. Not only do you have to figure out what content to push, but you have to figure out when to push it.

For their entry into Audio AR, Meta has taken the opposite approach.

Photo by Phil Nickinson for Digital Trends

A Glimpse of the Future

Meta’s Wayfarer sunglasses are perhaps the best Audio AR product currently on the market. They combine the enabling technologies – smart headphones, voice recognition and synthesis, LLMs, and context data – into a single, cohesive product.

Despite this, they’re kinda flying under the radar. Before I had my pair, I knew one person who used them…and they work at Meta. If you aren’t familiar with Meta’s Wayfarers, here’s a quick rundown. They look nearly identical to Ray-Ban’s iconic Wayfarer glasses, with a bit more heft in the arms. They have built-in speakers that do a great job of delivering spatial audio so only you can hear it; there’s no bass, but it’s great for spoken word. They have cameras that let you take pictures and short videos of your POV. And if you join the beta program, you can access Meta’s multimodal voice AI. If you want to know more about what you’re looking at, just say “Hey Meta,” and ask.

To be sure, there are rough edges. The assistant refuses to answer many types of questions and is occasionally wrong. The interface is invisible, forcing users to resort to trial and error.

Despite this, Meta’s Wayfarers succeed because they use a “pull” UX model: users have to explicitly ask for information. The guesswork of knowing when to push content is eliminated, a welcome decision for a product whose rough edges are still very apparent (hence the beta program). Contextual data is still used to inform the assistant’s response – both the user’s location and the camera’s view – but the user controls the timing.

And when everything clicks, Meta’s Wayfarers are a glimpse of the future.

Focusing on Specific Context Sessions

There’s a hybrid approach to the UX worth considering: focusing on a specific context within a session that the user proactively starts. During this period the app can push content with confidence that it’s relevant. This design pattern is put to excellent use by Apple’s Fitness app.

If you keep an AirPod in your ear while using the Fitness app, you’ll receive pacing information, heart rate feedback, and more. When I’m cycling, the Fitness app pipes notifications into one ear, letting me keep my phone in my bag. Because I’ve engaged a specific context, the push notifications are all relevant. The surface area of the UX is limited, allowing for a more controlled experience.

Beyond fitness, this model could work well for a host of contexts where the user opts into a context-specific session. VoiceMap could blanket a city with generated content and allow users to engage an exploration mode, inviting the app to push content frequently. A cooking app could push instructions and set timers once a recipe session starts. A shopping app could provide product rankings and reviews as one picks up items in a store, once a shopping session begins.

Focusing on actively engaged contexts is a great way to chip away at Audio AR’s problem to be solved. The app gets to push with abandon, but only when the user turns it on. Developers get to focus on a specific set of contextual data to obtain and consider, and a limited set of notification triggers.
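In code, the pattern is almost embarrassingly small, which is part of its appeal. The sketch below is my own illustration, not how Apple or anyone else implements it: the `ContextSession` class and the trigger names are hypothetical, and `spoken` stands in for whatever would actually route audio to the headphones.

```python
class ContextSession:
    """A user-started session that only lets through a fixed set of triggers."""

    def __init__(self, name, allowed_triggers):
        self.name = name
        self.allowed_triggers = frozenset(allowed_triggers)
        self.active = False
        self.spoken = []  # stand-in for audio actually played to the user

    def start(self):
        # The user opts in, e.g. by starting a workout or a recipe.
        self.active = True

    def stop(self):
        self.active = False

    def push(self, trigger, message):
        """Deliver message if the session is active and the trigger belongs to it."""
        if not self.active or trigger not in self.allowed_triggers:
            return False  # outside the session or off-topic: stay silent
        self.spoken.append(message)
        return True
```

A cycling session created with `ContextSession("cycling", {"pace", "heart_rate"})` would speak pace updates after `start()` and drop everything else, including a well-meaning restaurant recommendation.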

What We Need for the Mainstream

To fully realize the potential of Audio AR we need to build the tools and obtain the data needed to turn our context into an invisible interface.

In the three examples discussed above – VoiceMap, Meta’s Wayfarers, and Apple Fitness – we see both the potential of Audio AR and the challenges facing it. Each product is awkward in its own way, but their stumbles often have to do with access to contextual data.

If you go off the rails of a VoiceMap tour, the app has nothing to say. Its knowledge of the world is limited to the path prepared by a tour creator; it can’t help you explore.

Meta’s Wayfarers can’t answer any questions regarding my calendar or messages because they don’t have access to that data. And Siri, which can access those things, can’t be invoked from the glasses.

Apple Fitness doesn’t suggest I head home if I have a meeting soon or it’s about to rain. The data is there, but the contextual domain Fitness cares about is limited to my workout.

It feels like we’re back at the beginning of the Web 2.0 era, before the Cambrian explosion of APIs that allowed our tools to talk to each other. Assistant interfaces present themselves as having vast capabilities, yet they stumble when they need anything from another platform.

For Audio AR to take off beyond contextual sessions, we need open standards for interfacing with headphones, sharing contextual information, and invoking voice assistants. Recent updates to Apple’s APIs for AirPods and Android’s Awareness API are steps in the right direction, but we’re still a long way from the ideal foundation. (Hopefully fear of antitrust spurs better habits…)

Until we get to this stable foundation, we’ll be chipping away at the UX problem of Audio AR – delivering the right information at the right time – in specific context sessions.

Our Guy in the Chair

The tech industry has the habit of getting behind new technology use cases when they have a solid pop culture reference. Ready Player One was more valuable as a touchstone for Meta’s VR positioning than it ever was as a movie. The number of big data or business intelligence companies who sold their wares by referencing Minority Report or The Dark Knight is too high to count.

Since ChatGPT arrived and AI began having its moment, the tech industry has leaned heavily on Her to bring to life the vision of a conversational AI. And while this might seem fitting for Audio AR, I think we can do better.

People don’t want a conversational relationship with an AI assistant. People want a guy in the chair.

Perhaps the best example of the “guy in the chair” trope is Ving Rhames as Luther Stickell in the Mission: Impossible series. Luther is the tech guy who stays back at the base, monitoring the team’s progress and providing them with the information they need to succeed. He’s the one who can see the big picture, who can guide the hero to success. He’s the one who can deliver the right information at the right time, without the hero having to look away from the task at hand.

The assistant in Her is an anomaly. The guy or gal in the chair trope is constant and pervasive in our media. It’s in Mission: Impossible, The Matrix, Apollo 13, The Martian, and the Batman, Spider-Man, and Avengers series. In video games it’s a staple, pivotal to the design of Metal Gear Solid, Portal, Half-Life, and Mass Effect. The examples listed on the TV Tropes page are seemingly endless.

We want a guy in the chair, delivering the right information at the right time, without us having to look away.

Audio AR represents a major shift in how we interact with digital information. By moving the interface from our screens to our ears, it allows us to stay heads-up and engaged with the world around us. It has the potential to make technology feel less intrusive, more ambient, and less constrained by the form factors of our devices.

But to get there we need to solve some key interaction challenges. We need open standards for integrating with headphones and understanding context. We need thoughtful design that delivers the right information at the right time.

The enabling technologies are already in place. Headphones, voice interfaces, and AI have reached a level of maturity where Audio AR is practical. And we’re seeing compelling products – not just experiments – like Meta’s Wayfarer glasses.

The age of Audio AR is already here. It’s just a matter of time before it becomes mainstream.